Setting Up A Raspberry Pi for Data Engineering and Virtualization Projects

Introduction

Building your own Raspberry Pi server is an excellent way to gain practical experience with Linux systems, networking, and server administration. This hands-on project will teach you valuable skills applicable to larger-scale environments while giving you a reliable platform for personal projects. Whether you’re a student, hobbyist, or IT professional looking to expand your knowledge, this guide will walk you through creating a robust, secure server environment from scratch.

This guide provides step-by-step instructions and explanations for configuring a Raspberry Pi 4 to learn about hardware, servers, containerization, and self-hosting principles. To be clear, this guide is not exhaustive, and I’m sure there were areas where I made mistakes or misunderstood topics. I’m encouraging you to let me know if you find any issues! You can submit feedback via GitHub on the guide on my website.

The primary purpose of this guide is to help me reference what I previously did and understand my thought process when I need to troubleshoot or recreate something. The secondary purpose is to provide a helpful resource for others in similar situations, as I struggled to find the comprehensive document I needed when starting this journey.

Eventually, I’d like to set up an actual server cluster and self-host some interesting, more resource-intensive applications. Before making that kind of commitment, I wanted to learn the basics and see if this was something I would enjoy—the good news is that I learned I do. The great news is that Raspberry Pi makes their hardware very affordable and easy to purchase. Here’s the official webpage for the exact computer I bought.

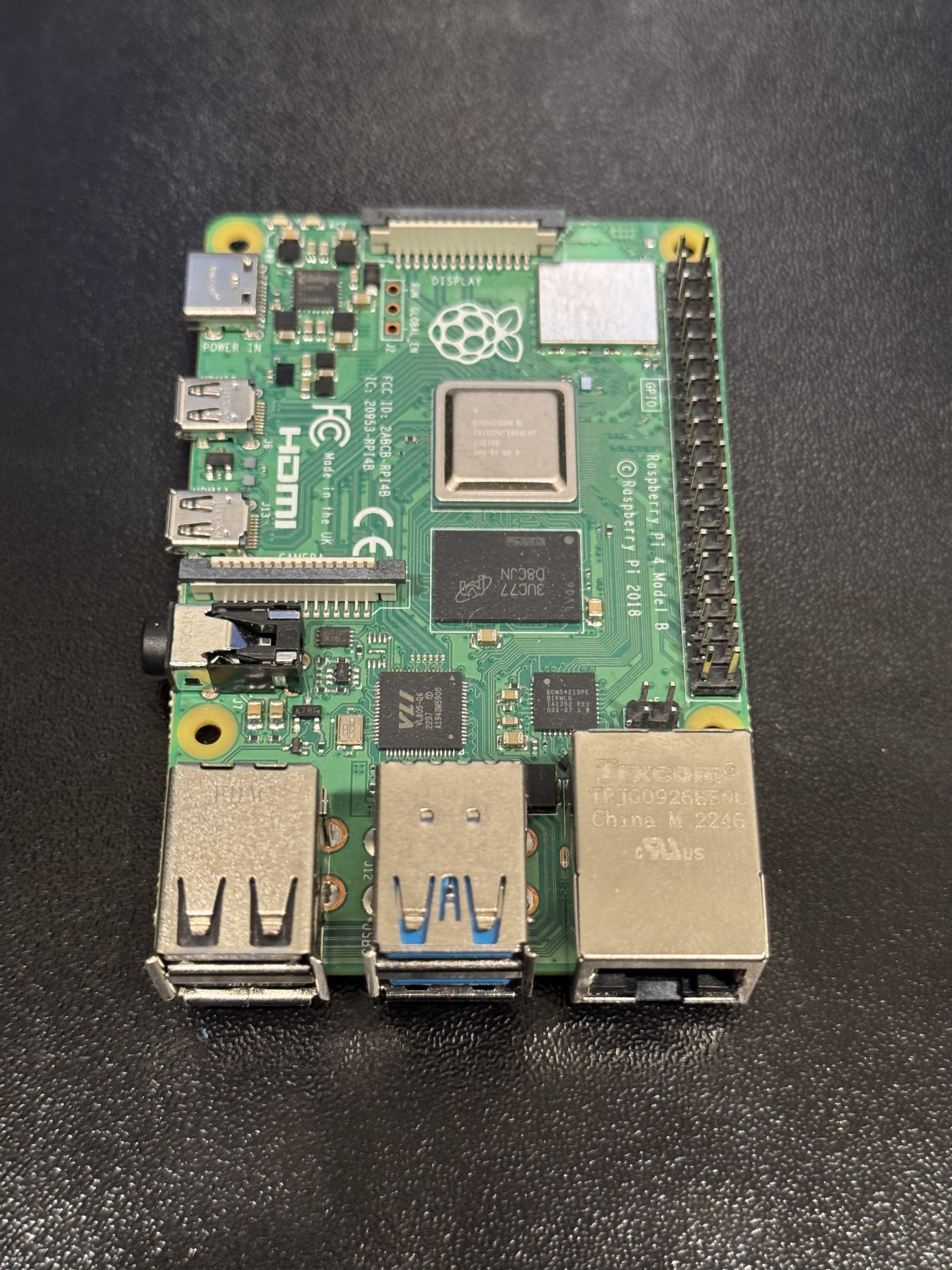

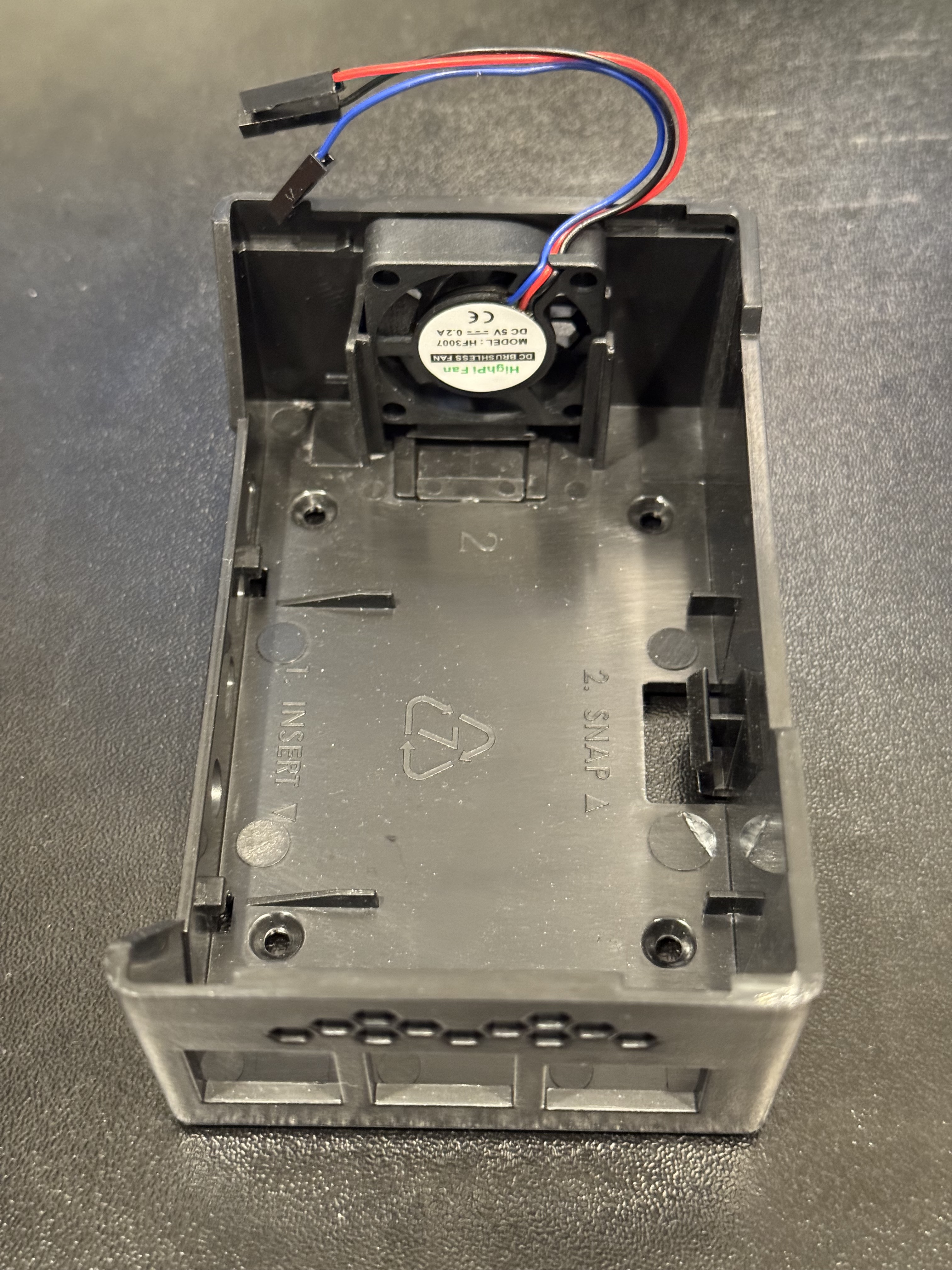

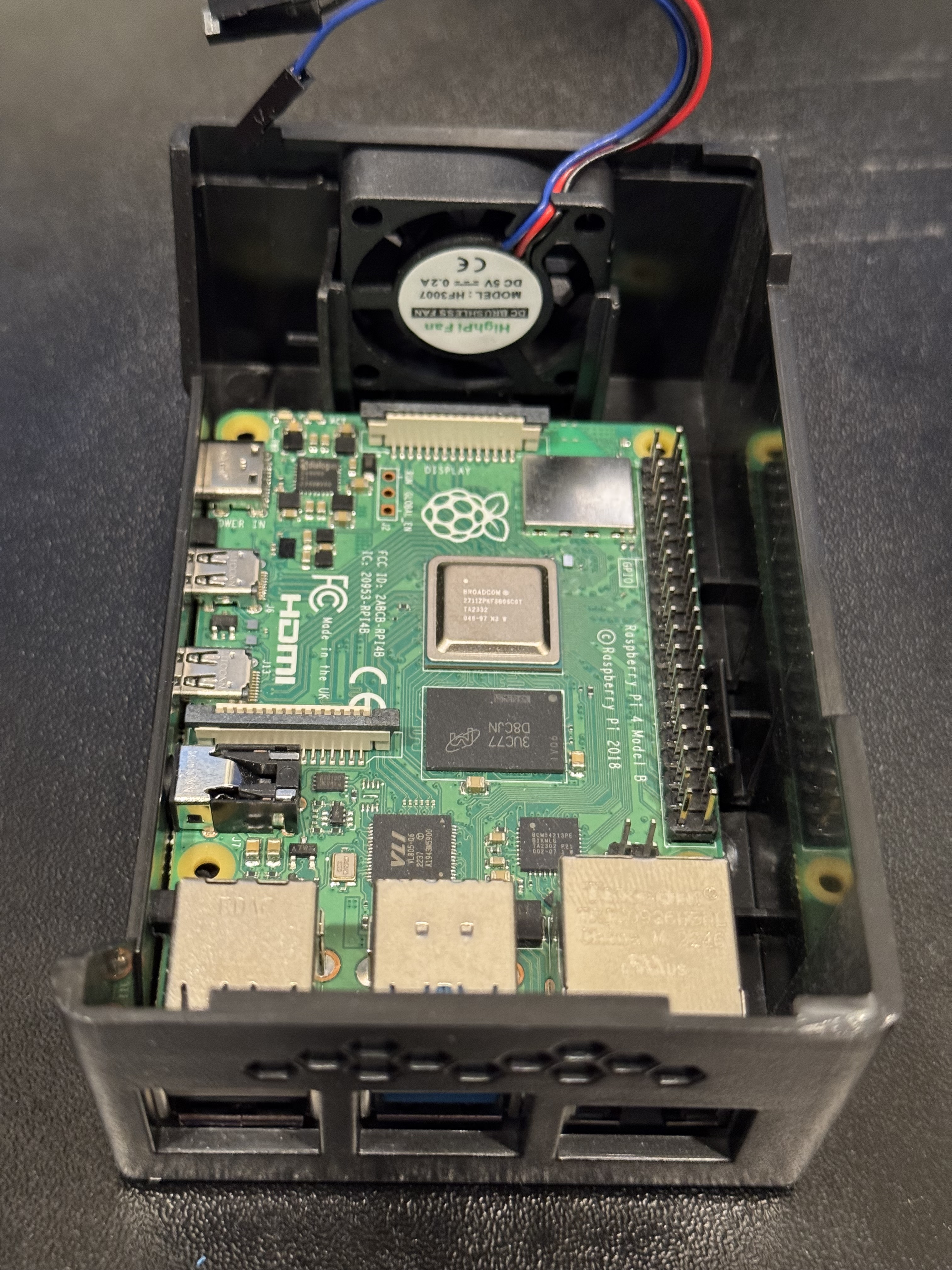

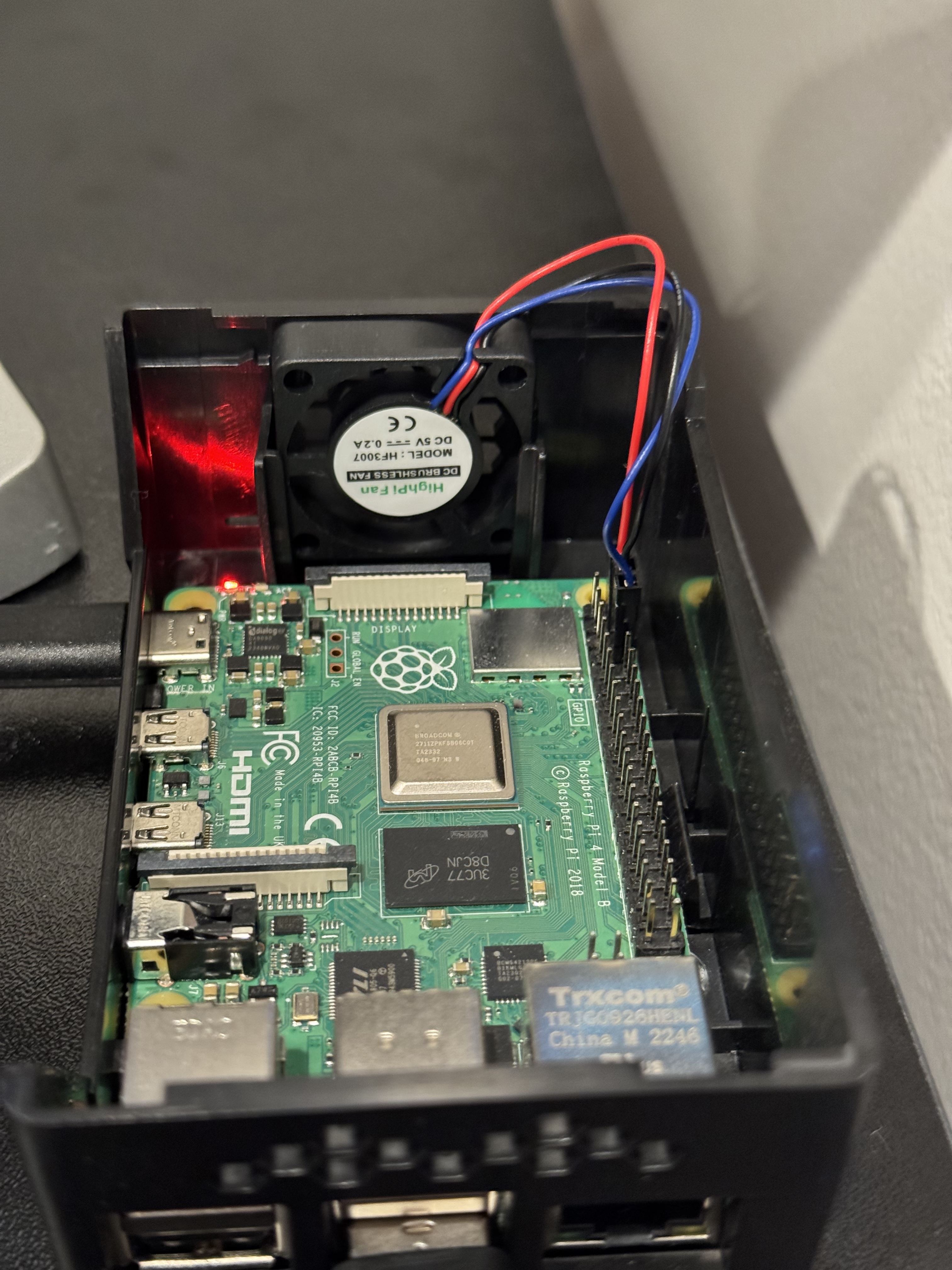

I purchased the 8GB Raspberry Pi 4 model. The price difference isn’t that significant compared to the lesser 2GB and 4GB models, but the performance improvement is substantial. Additionally, because I’m planning to host and experiment with CI/CD, I also bought a case and cooling fan to help with longevity. All in, the base price (including the power supply and HDMI cable) is $107.30 before taxes, shipping, and other fees.

Below you’ll find an outline that provides a general idea of what we’ll be covering and in what order. At the start of each section, I’ll include a key terms list that covers the fundamental concepts important for that topic.

Guide Outline

1. Introduction

- Purpose and scope of the guide

- What you’ll learn and build

- Prerequisites and assumptions

2. Initial Setup

- Hardware Requirements

- Raspberry Pi 4 specifications

- Storage devices (Thumbdrive, SSD, microSD cards)

- Accessories and peripherals (Keyboard, monitor, etc.)

- Image Requirements

- Selecting and downloading Ubuntu Server LTS

- Using Raspberry Pi Imager

- Initial configuration options

- Getting Started

- The physical setup of the Raspberry Pi

- What to expect during first boot

3. Linux Server Basics

- First Boot Process

- Connection and startup

- Understanding initialization

- Service Management with systemd

- Understanding systemd units and targets

- Basic service commands

- Understanding Your Home Directory

- Shell configuration files

- Hidden application directories

- The Root Filesystem

- Filesystem Hierarchy Standard (FHS)

- Key directories and their purposes

- User and Group Permissions

- Basic permission concepts

- chmod and chown usage

- Understanding advanced permissions

4. Networking Basics

- Computer Networking Concepts

- OSI and TCP/IP models

- Key networking protocols

- Network Connections

- Wired vs wireless configurations

- Understanding IP addressing

- Ubuntu Server Networking Tools

- Testing connectivity

- Viewing network statistics

- systemd-networkd

- Configuration file structure

- Wired and wireless setup

- Converting Netplan to networkd

- Why and how to transition

- Troubleshooting network issues

- Advanced Networking

- Subnets and routing

- Security considerations

5. SSH (Secure Shell)

- SSH Basics

- Client vs server setup

- Key-based authentication

- Key-Based Authentication

- Types of SSH key encryption

- Generating keys

- Installing the public key

- Server-Side SSH Configuration

- Host keys and security options

- Optimizing for security

- Client-Side Configuration

- Setting up SSH config

- Managing known hosts

- Additional Security Measures

- Firewall configuration with UFW

- Intrusion prevention with Fail2Ban

- Secure File Transfers

- Using SCP (Secure Copy Protocol)

- Using rsync for efficient transfers

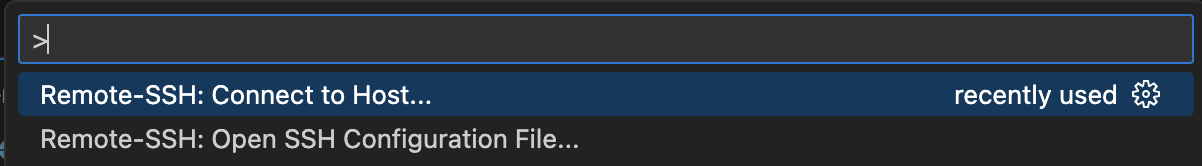

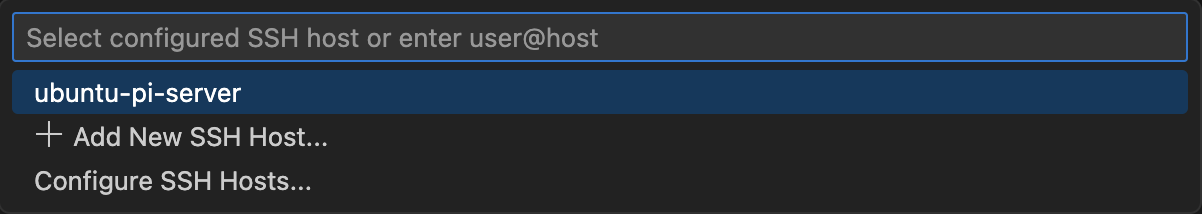

6. Remote Development with VS Code

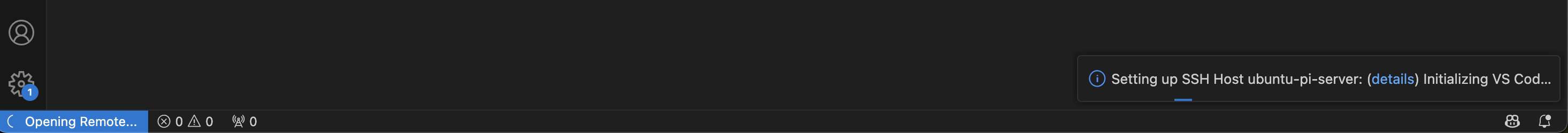

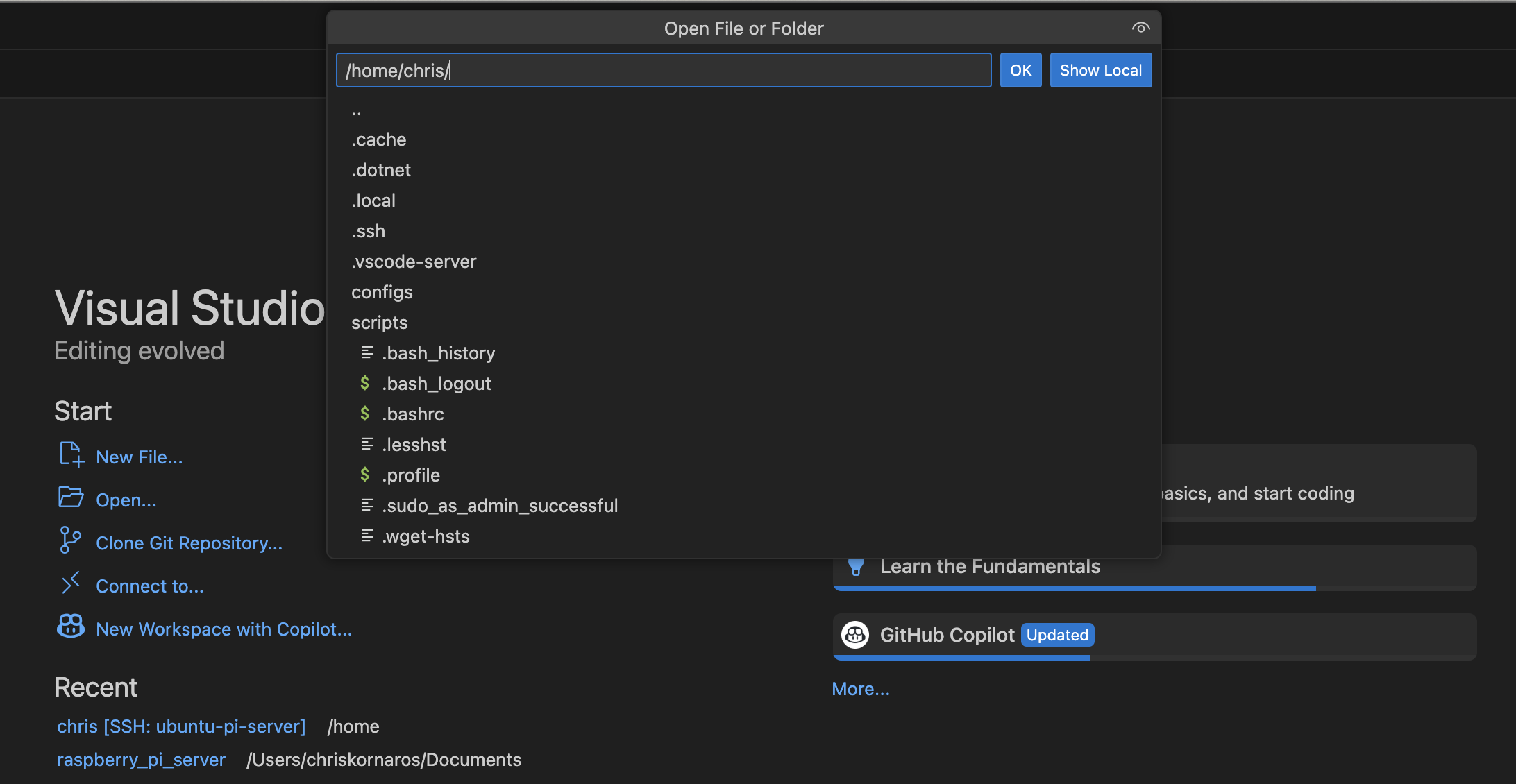

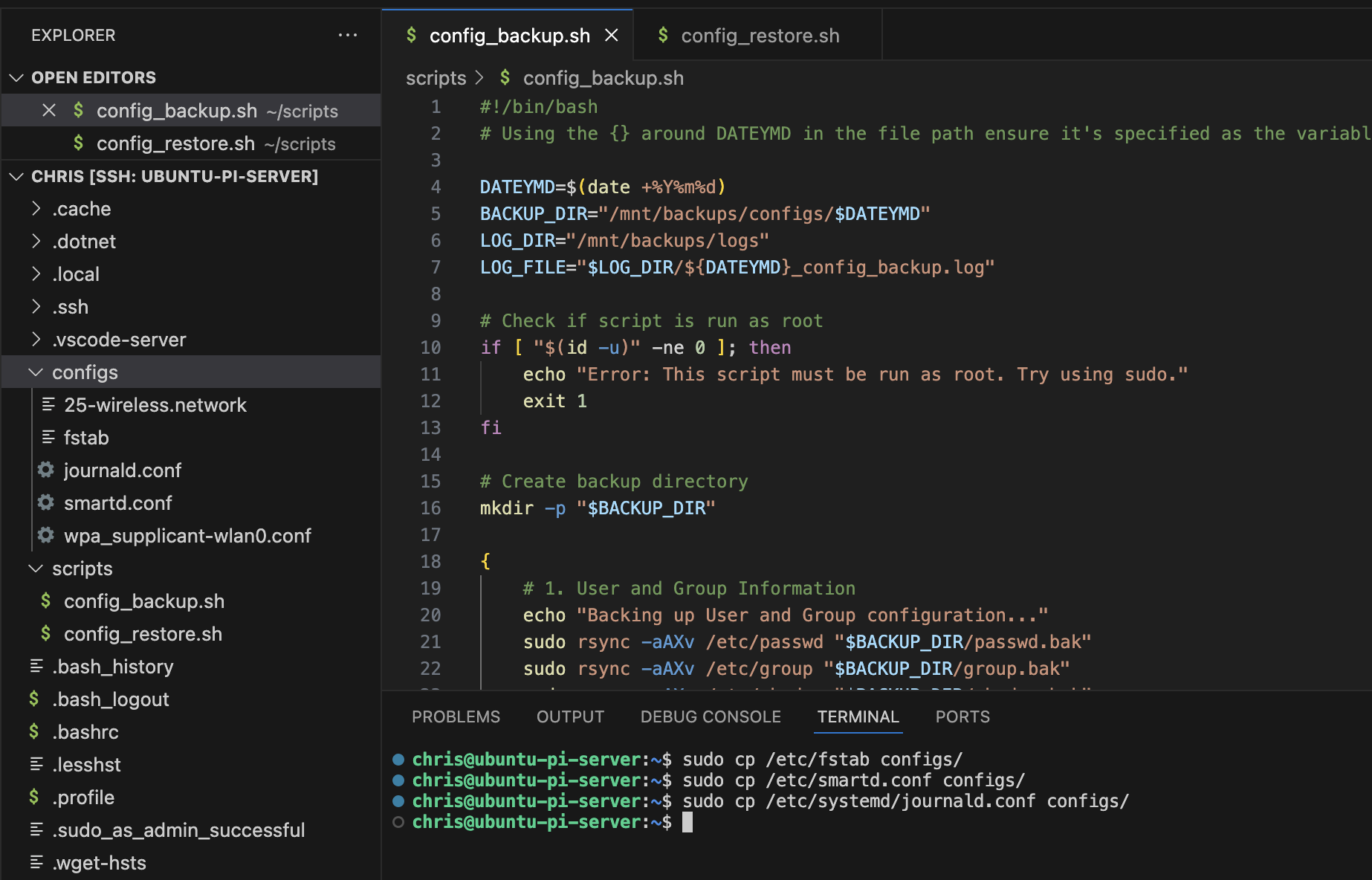

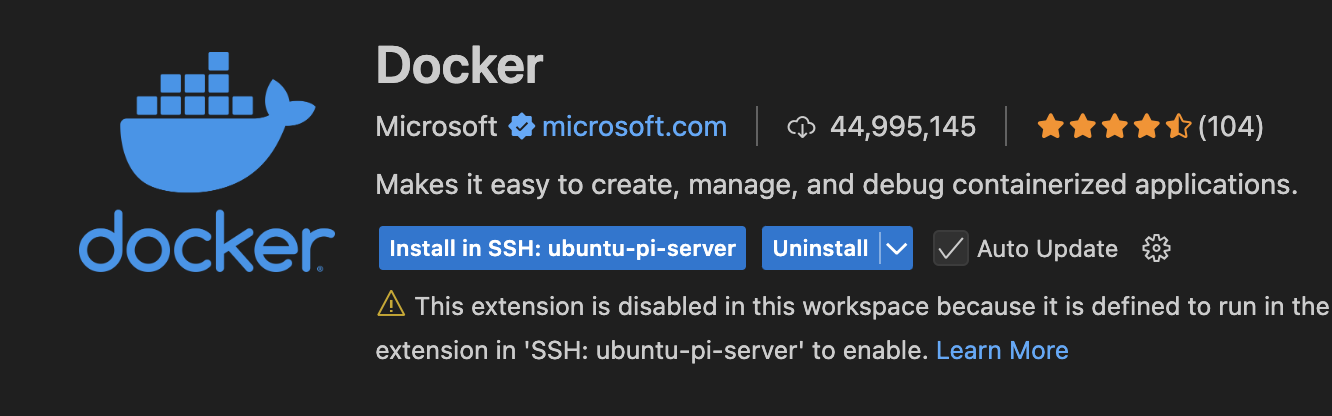

- Setting Up VS Code Remote SSH

- Managing Remote Projects

- Debugging and Terminal Integration

7. Partitions

- Partitioning Basics

- Understanding partition tables and types

- Filesystem options and considerations

- Partitioning Tools

- Using parted and other utilities

- Partitioning for Backups

- Setting up microSD cards

- Mount points and fstab configuration

- Partitioning your SSD

- Boot and root partitions

- Formatting and preparation

- Advanced Partitioning

- Monitoring usage

- Resizing partitions

8. Backups and Basic Automation

- Backup Basics

- Directory structure and permissions

- Configuration Files Backup

- Using rsync for system configurations

- Remote transfers of backups

- Restoring from Backup

- Creating restoration scripts

- Testing recovery procedures

- Automating Backups with Crontab

- Creating a schedule for scripts

- Verifying the schedule

9. Changing Your Boot Media Device

- Boot Configuration Transition

- Flashing OS to new media

- Proper shutdown procedures

- Physically changing boot devices

- Testing the new boot configuration

- Restoring configurations

10. Monitoring and Maintenance

- Monitoring Basics

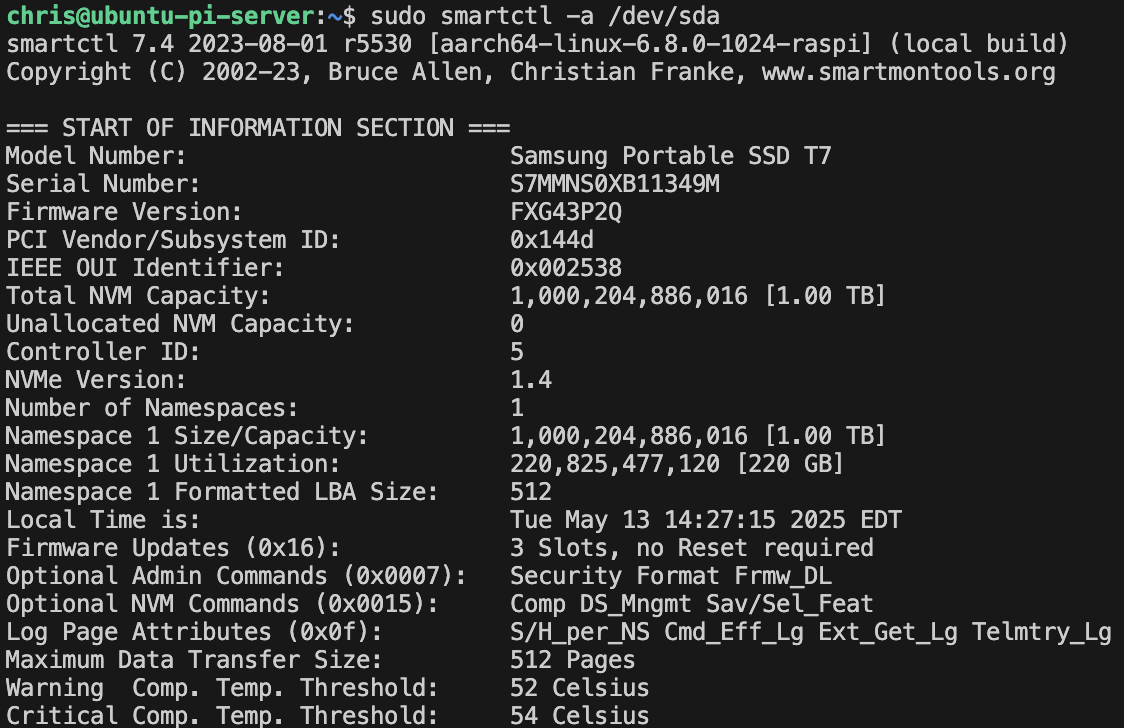

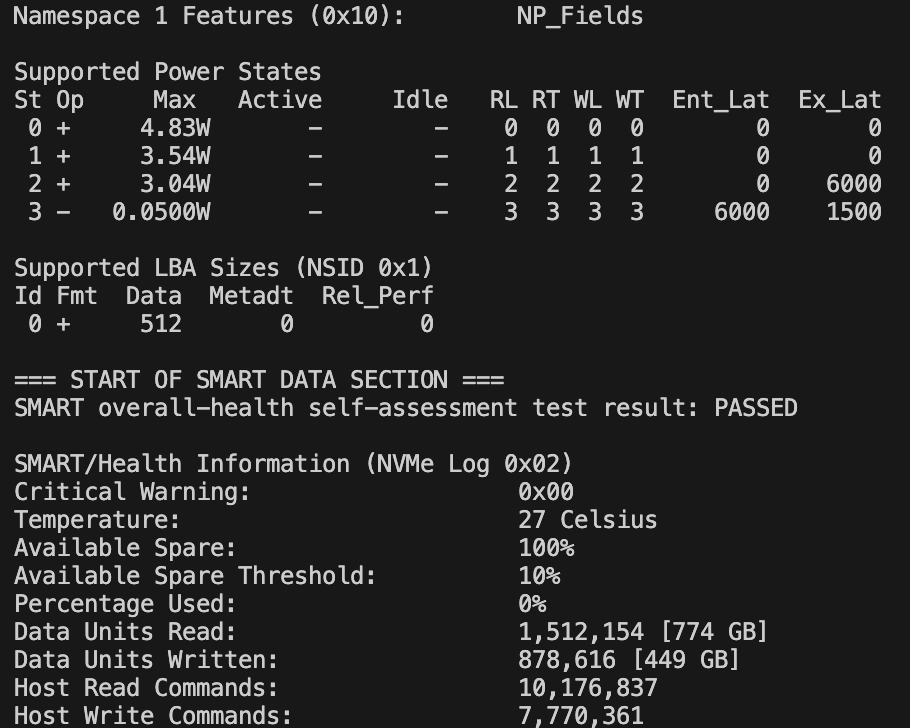

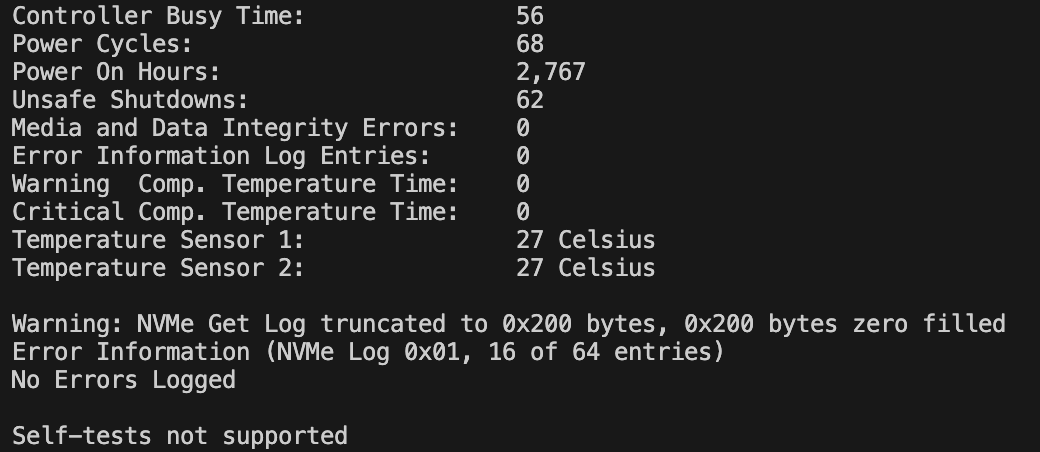

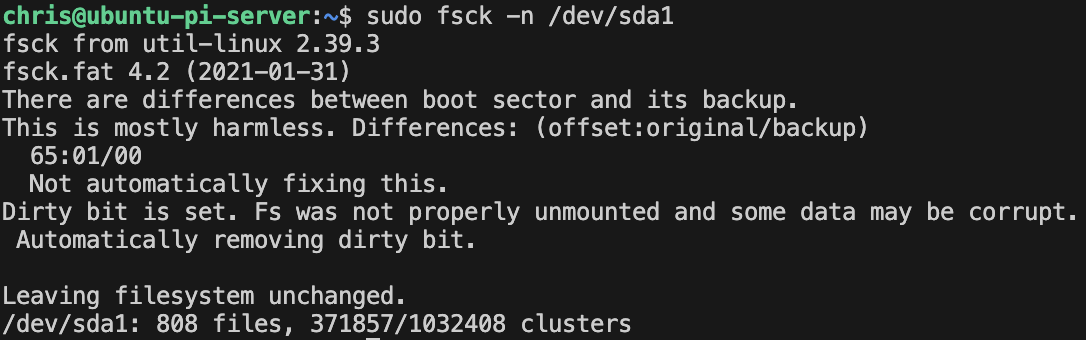

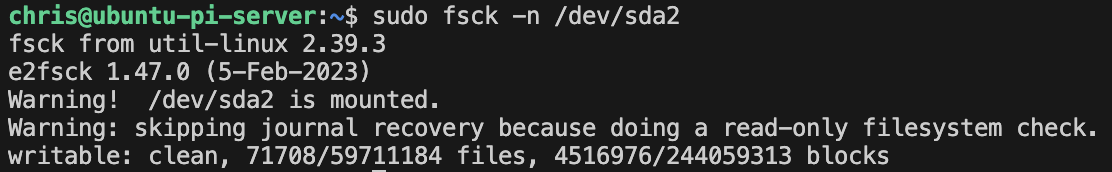

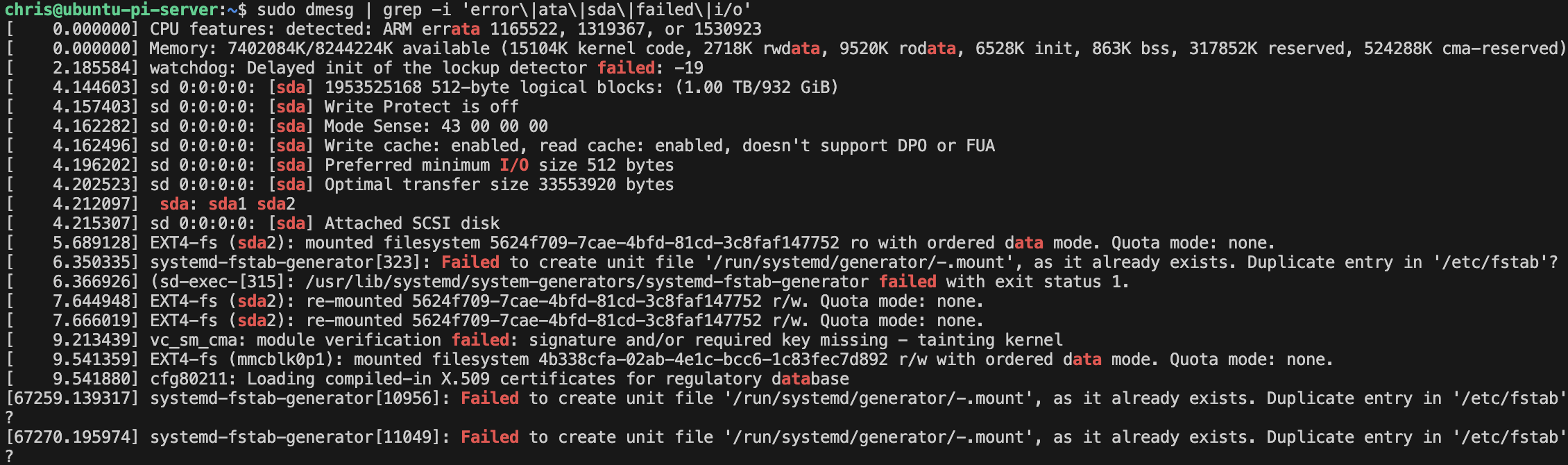

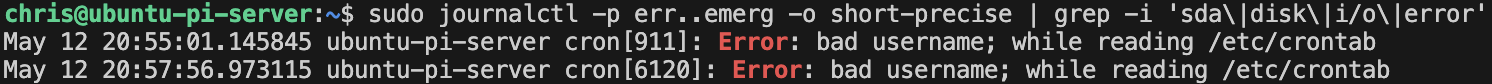

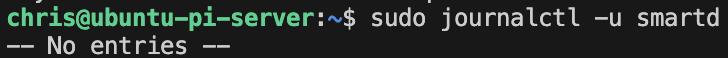

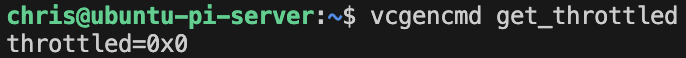

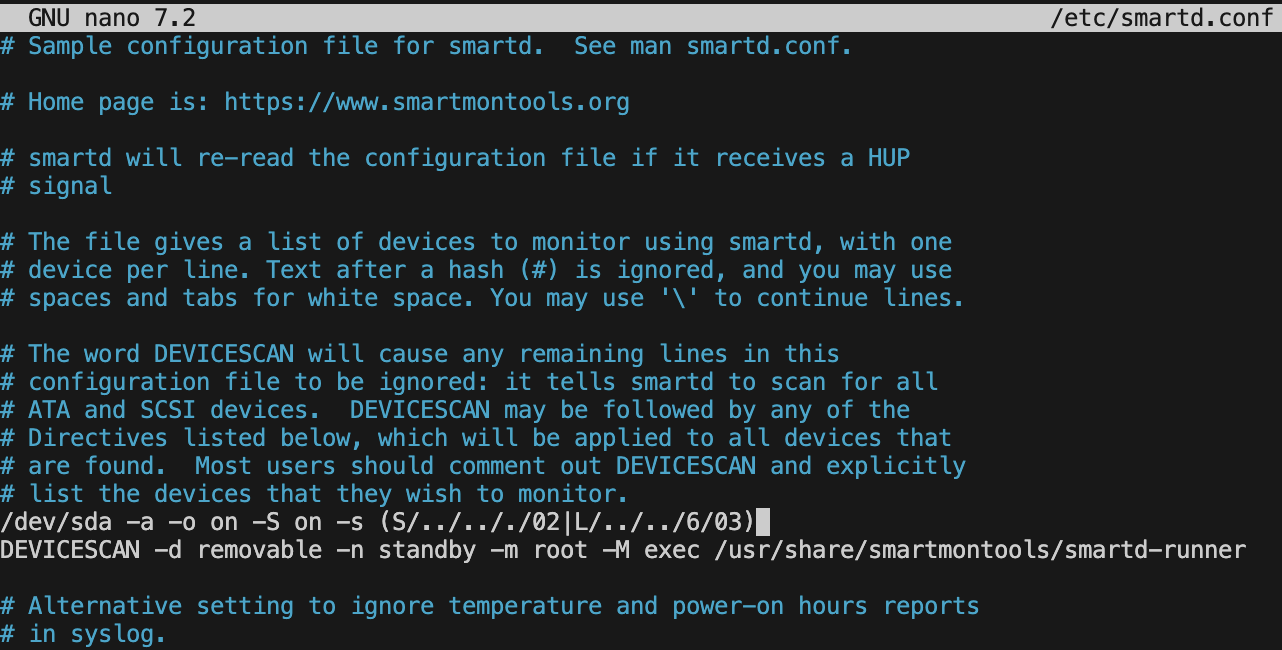

smartmon,vcgencmd- Resolving SSD health issues

- Security Updates and Patching

- Preventive Measures

- System patching schedules

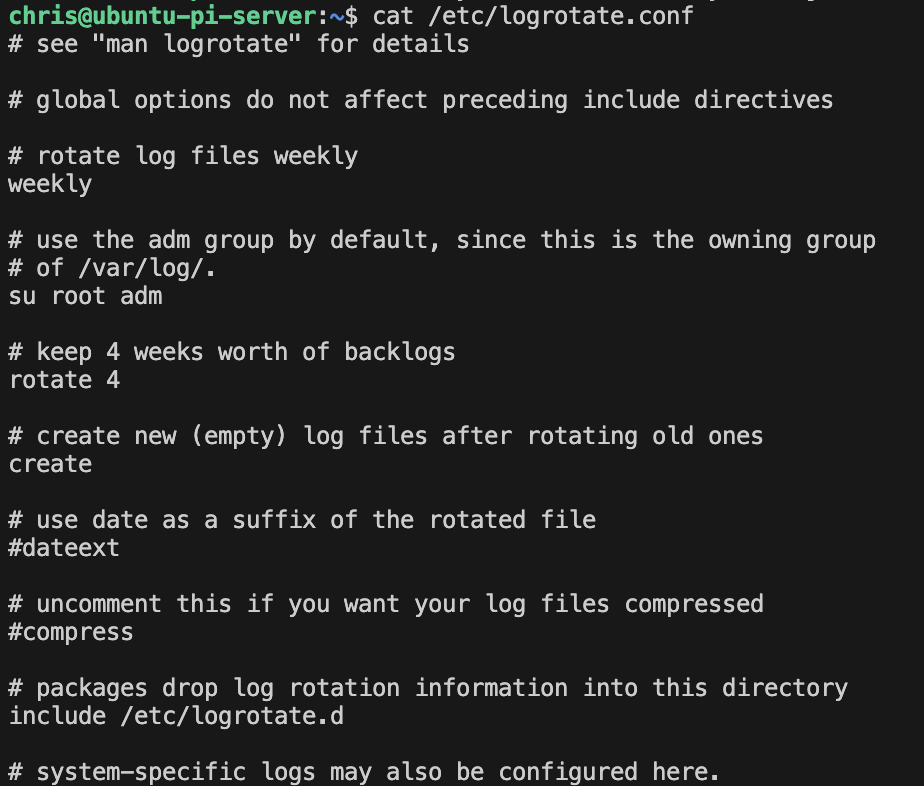

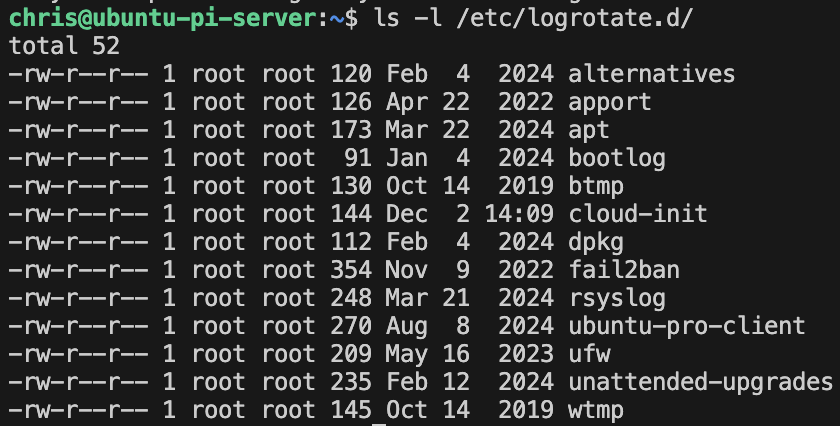

- Log Management

- Log basics

- Management tools and strategy

Initial Setup

Key Terms

Hardware Terminology:

- Raspberry Pi 4: A single-board computer developed by the Raspberry Pi Foundation, featuring a Broadcom BCM2711 processor, various RAM options, USB 3.0 ports, and GPIO pins.

- SoC (System on Chip): An integrated circuit that combines the components of a computer or electronic system into a single chip.

- GPIO (General Purpose Input/Output): Programmable pins on the Raspberry Pi that allow interaction with physical components and sensors.

- SSD (Solid State Drive): Storage device using flash memory that offers faster access times and better reliability than traditional hard drives.

- Boot Media: The storage device containing the operating system files from which the computer starts.

- USB 3.0: A USB standard offering data transfer speeds up to 5 Gbps, significantly faster than previous versions.

- microSD Card: A small form factor removable flash memory card used as storage media.

- eMMC (embedded MultiMediaCard): An integrated flash storage solution often found in compact devices.

Software and Imaging Terminology:

- Ubuntu Server LTS: A long-term support version of Ubuntu’s server operating system, maintaining security updates for 5 years.

- Raspberry Pi Imager: Official software tool for flashing operating system images to SD cards and other storage devices.

- Image: A file containing the complete contents and structure of a storage device or filesystem.

- Flashing: The process of writing an operating system image to a storage device.

- Headless Setup: Configuring a device to operate without a monitor, keyboard, or mouse.

- Public-key Authentication: An authentication method using cryptographic key pairs instead of passwords.

First Boot Terminology:

- Cloud-Init: A utility used by Ubuntu Server to handle early initialization when a server instance boots for the first time.

- First Boot Experience: The initial setup process that occurs the first time an operating system is booted.

- Initial RAM Disk (initrd): A temporary root filesystem loaded into memory during the boot process.

- Bootloader: Software that loads the operating system kernel into memory.

Hardware Requirements

This section provides basic setup instructions, so you’ll have the same tools I do and can follow along with this guide step-by-step.

- Raspberry Pi 4 8GB

- Micro HDMI to HDMI cord (for direct access)

- Protective case

- Cooling fan

- Appropriate Power Supply (use an officially suggested one)

- Keyboard (connected via USB for direct access)

- Monitor (for direct access)

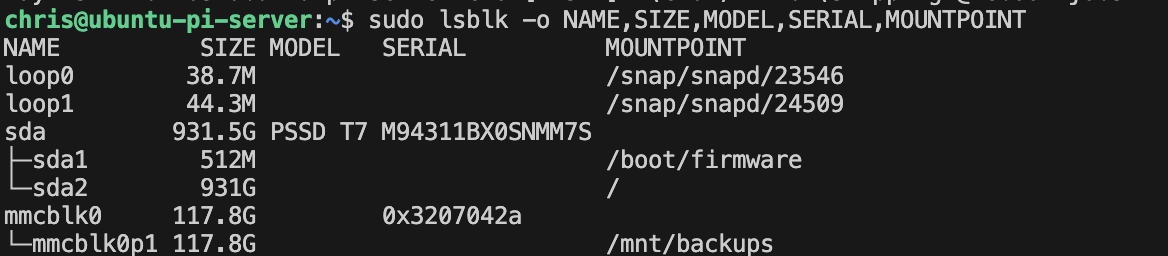

- 1TB Samsung T7 SSD (connected via USB for boot media/core memory)

- 64GB Generic Flash Drive (used as the boot media when partitioning the SSD)

- Amazon Basics 128GB microSD card (or other microSD cards for backups media)

- SSH-capable devices for headless access

- I’m using a MacBook Air

- I prefer macOS and Terminal for personal development, because I use Windows at my day job

Image Requirements

Once you have your hardware ready, you can begin setting up the software. I’m using Ubuntu Server LTS because it’s a stable version of Linux intended for headless, server environments. LTS means long-term support, so unlike the more frequently updated versions, these OS versions are supported for 5 years. Additionally, you’ll want to use public-key authentication for better security purposes, but more on that in the SSH Section.

Have your Thumb Drive ready and able to connect to either a laptop or desktop (whichever you plan to use with SSH)

Download Raspberry Pi Imager from the official website

Run the Imager and configure your installation of the most recent Ubuntu Server LTS image

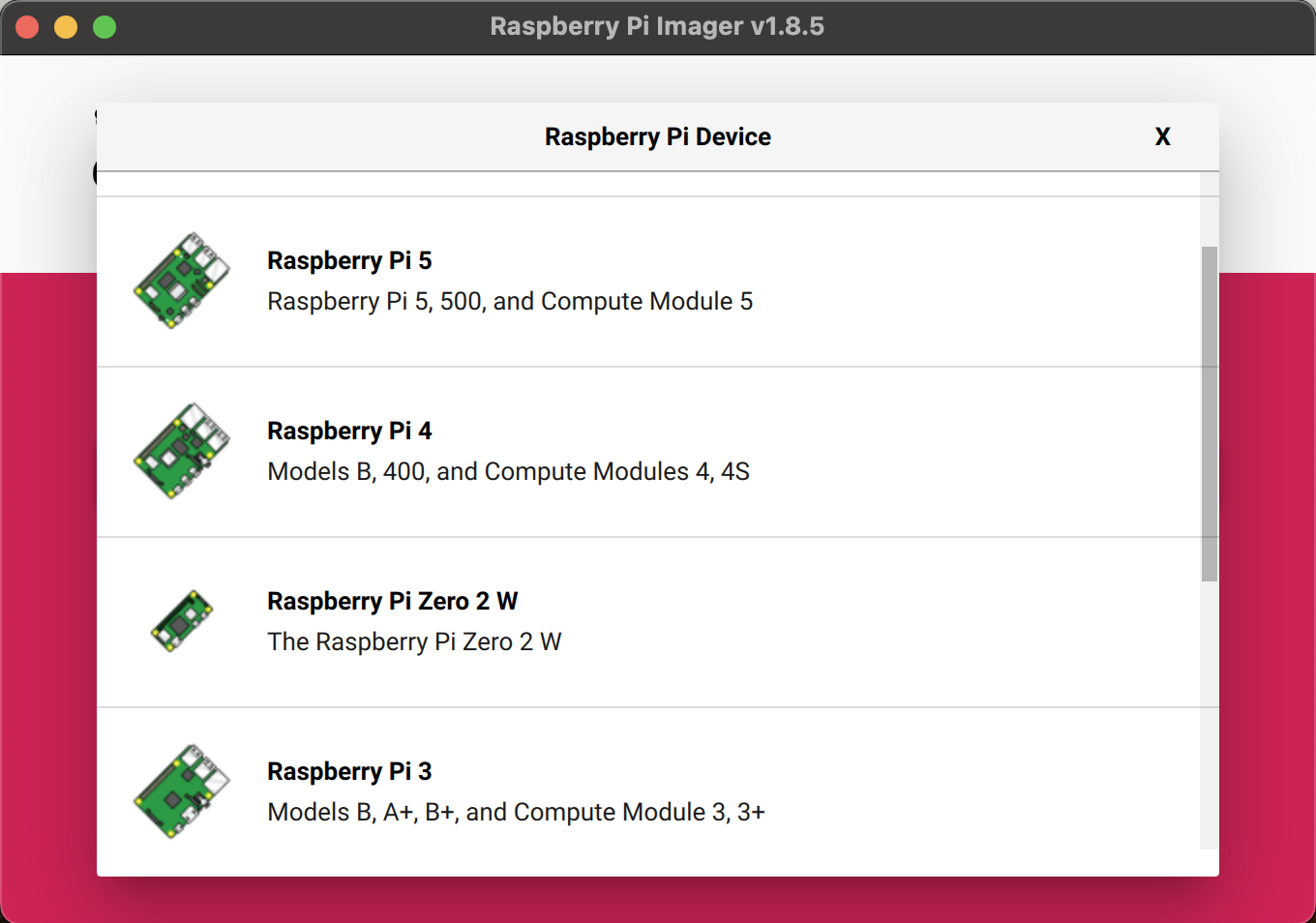

- Select your Raspberry Pi device

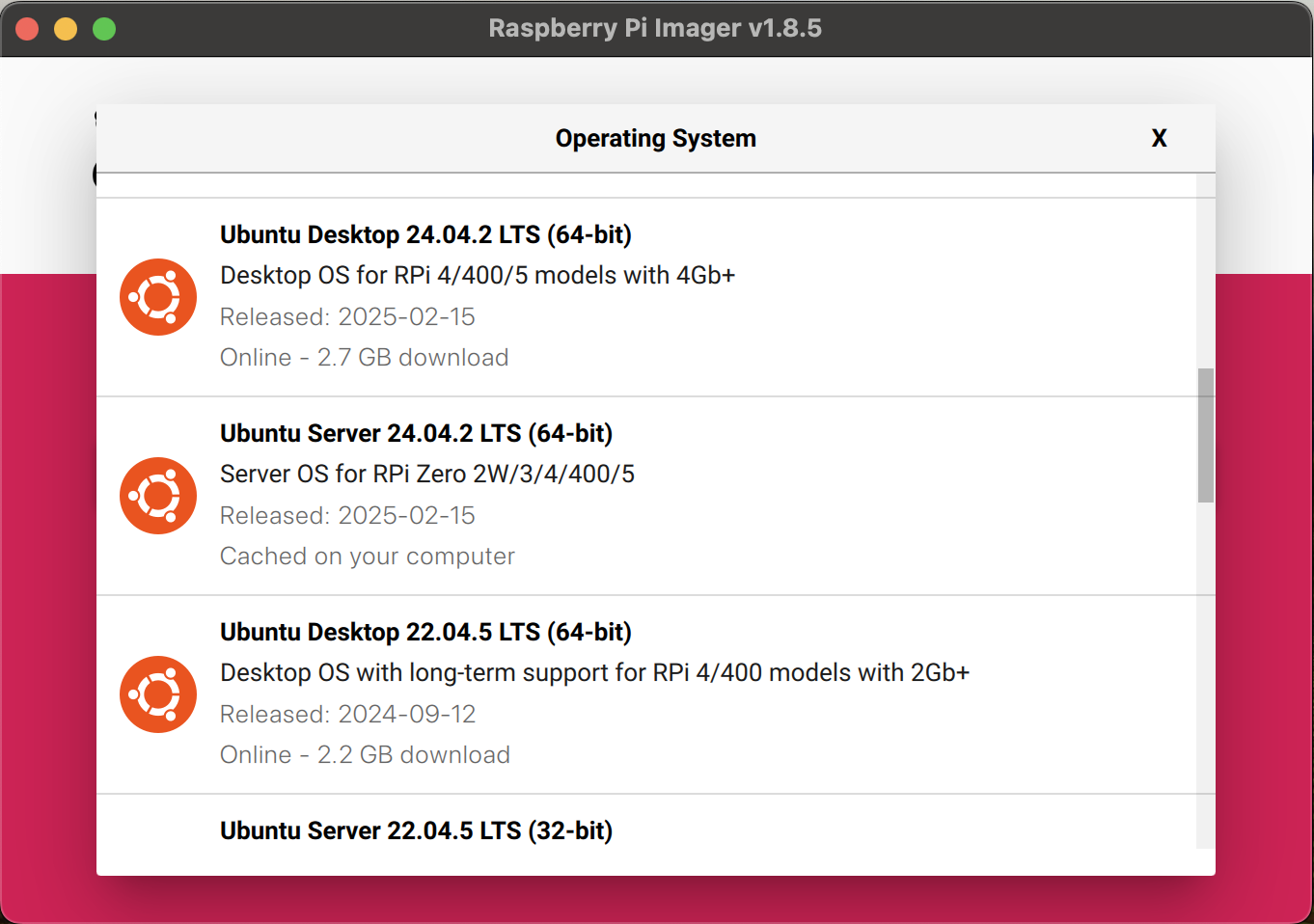

- Select the OS Image you want to flash

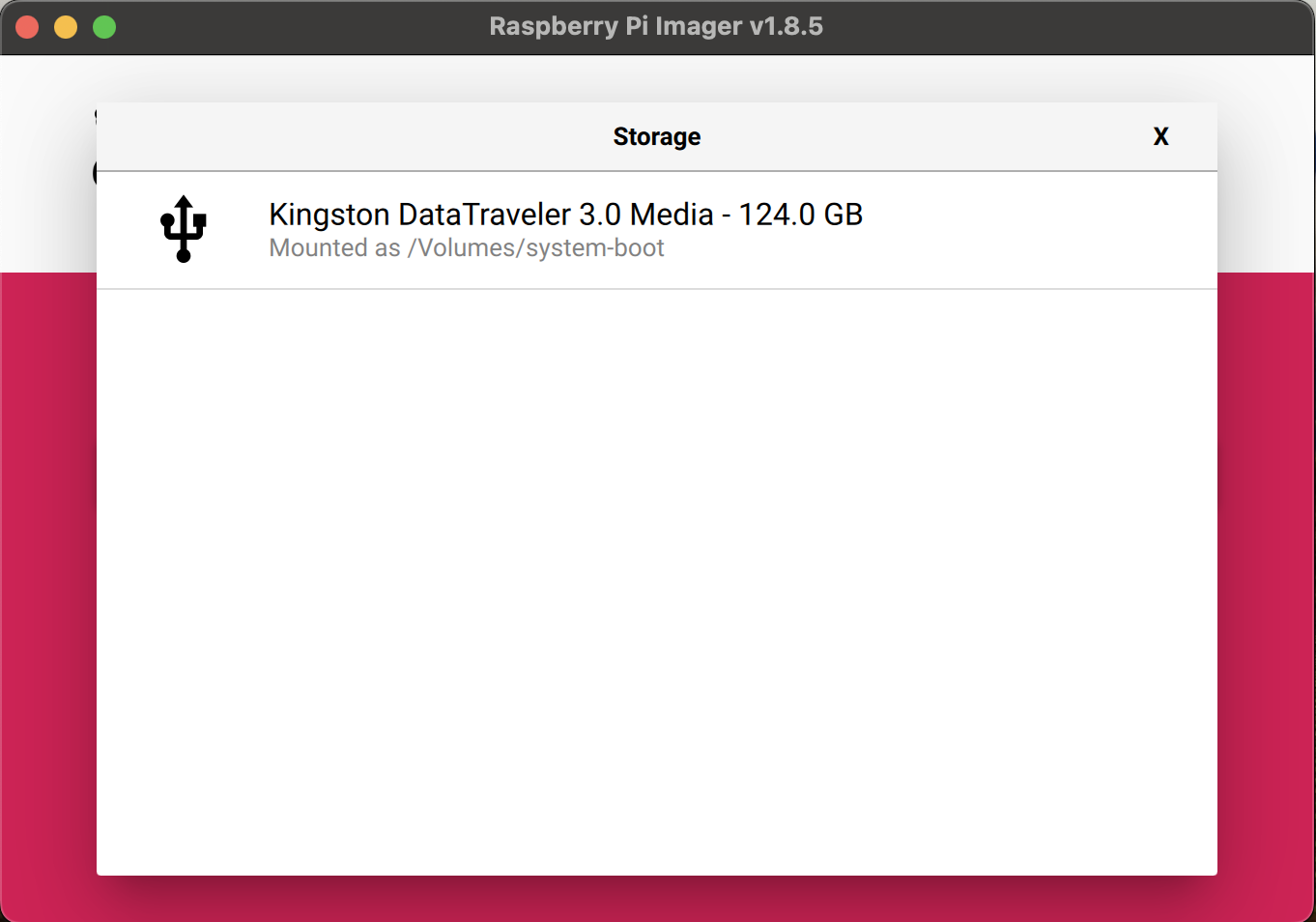

- Select the media storage device for the image

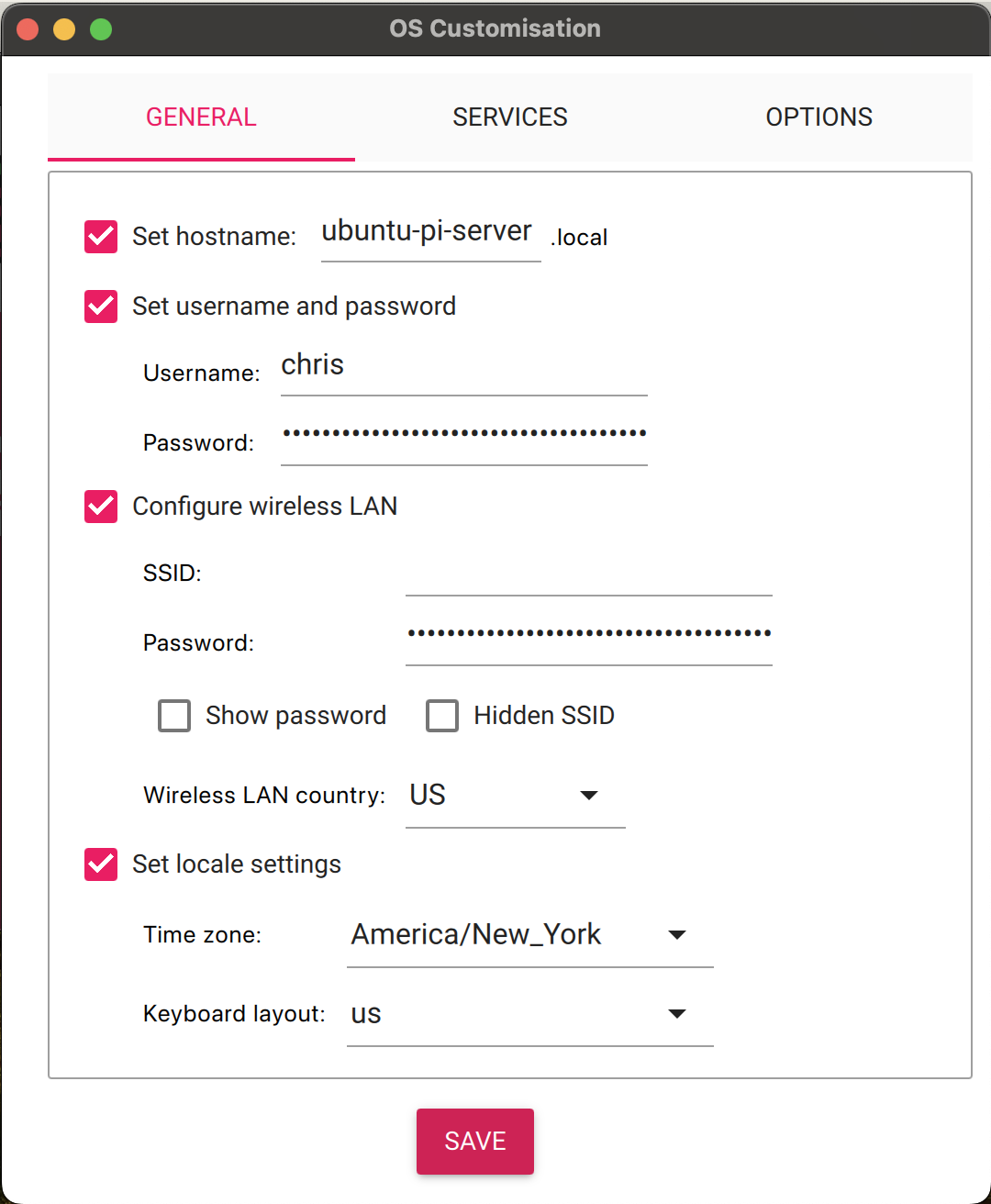

- Configure settings

Here, you’ll configure your primary user ID and password; network connection; locale and timezone; and your hostname (the nickname your computer remembers the IP address as).

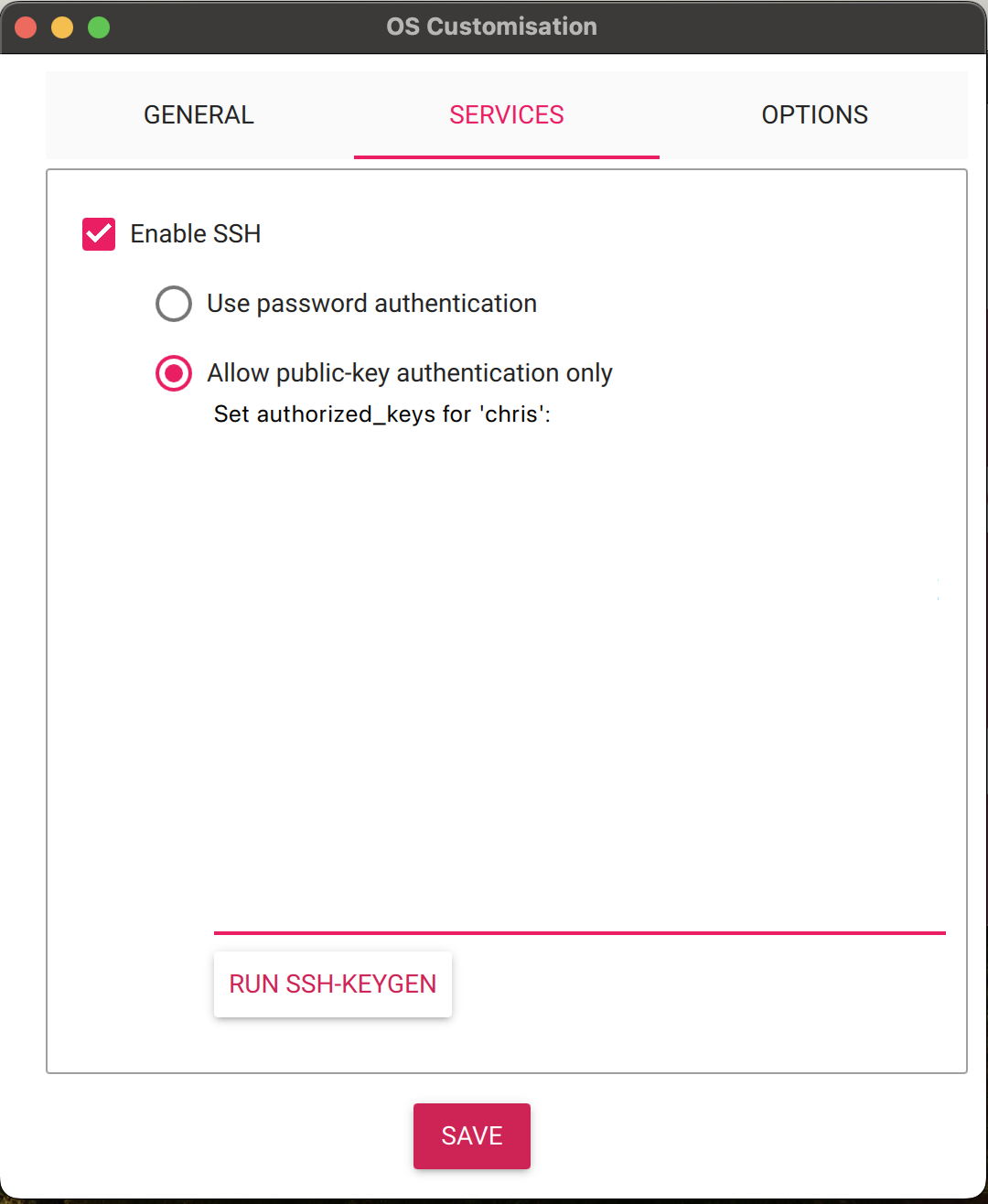

Here, you’ll configure your primary user ID and password; network connection; locale and timezone; and your hostname (the nickname your computer remembers the IP address as). Here, you’ll configure your SSH settings. You should probably use public-key authentication only when dealing with SSH in production, but for learning purposes, you don’t need to at this time. Later in this guide, I’ll walk you through the steps to manually configure SSH, if you are unfamiliar.

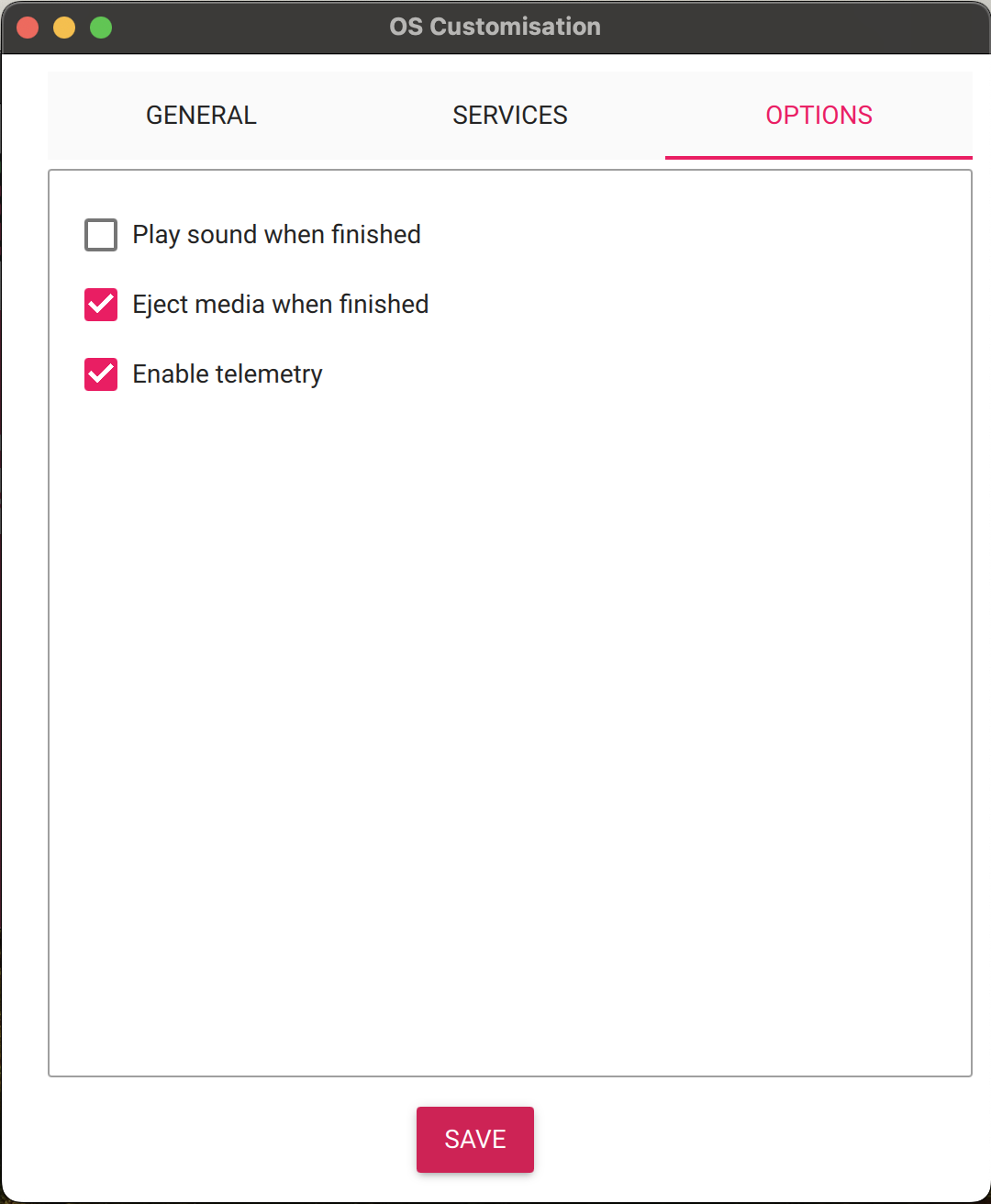

Here, you’ll configure your SSH settings. You should probably use public-key authentication only when dealing with SSH in production, but for learning purposes, you don’t need to at this time. Later in this guide, I’ll walk you through the steps to manually configure SSH, if you are unfamiliar. These are more preference based, but it’s nice to have the storage device automatically eject once the flashing is complete. Then, you just need to unplug it and plug it into your Raspberry Pi to get going.

These are more preference based, but it’s nice to have the storage device automatically eject once the flashing is complete. Then, you just need to unplug it and plug it into your Raspberry Pi to get going.

Getting Started

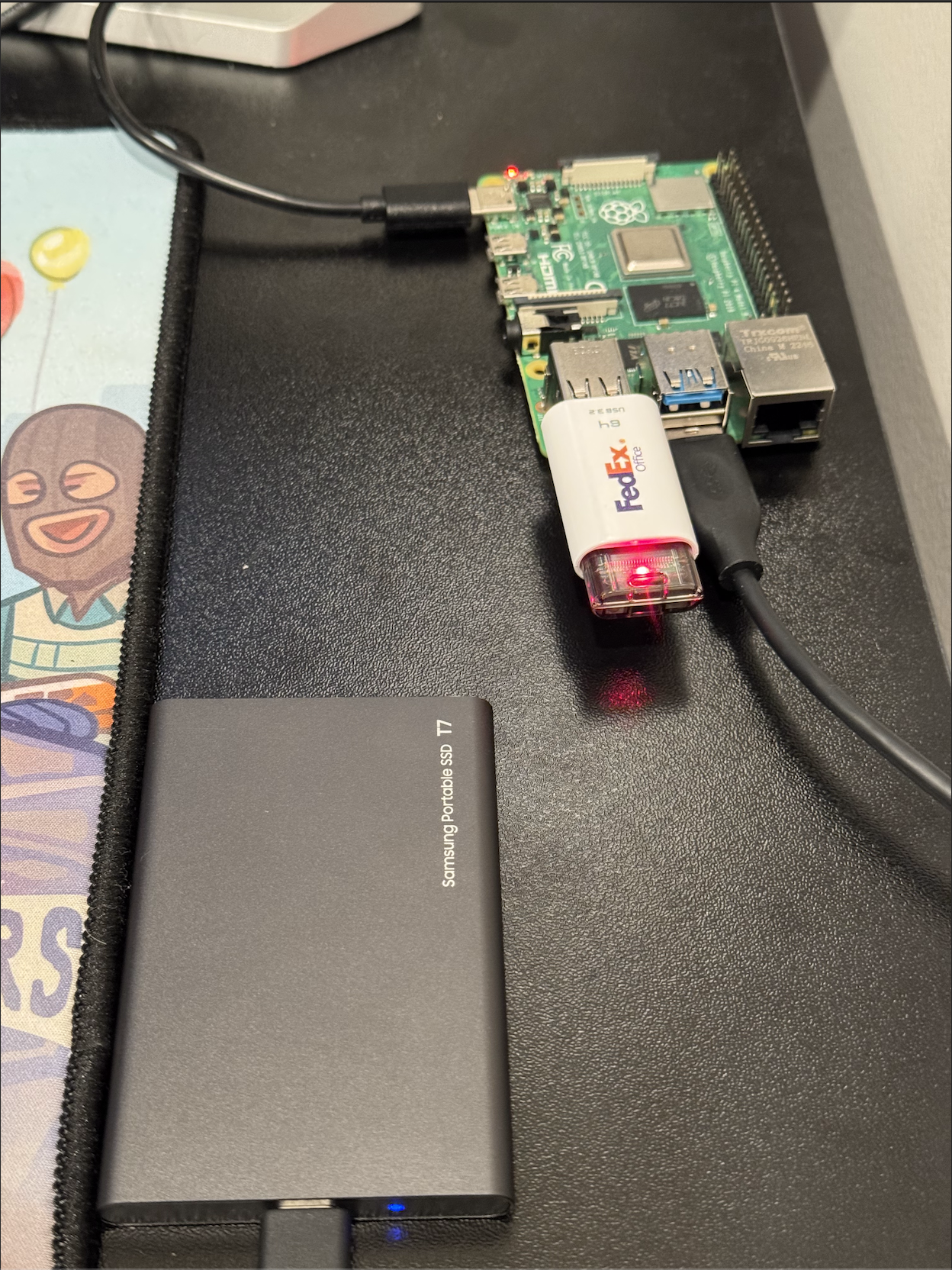

It’s time to set up the actual Raspberry Pi device. For most of this guide, I recommend leaving the Pi outside of the case, because it’ll be easier to plug and unplug some of the devices—the microSD slot is not accessible while the case is on. Later, once we’ve got everything configured and set up as we like, we will attach the fan and case, so it’s a bit safer and able to run in an always-on state. I’ll share a picture of what my server looks like during the early development, and then later I’ll show what it looks like with everything connected and set up.

Now you’re ready to plug your boot media device (the Thumb Drive) into your Raspberry Pi. You should also connect a keyboard, monitor, and power supply. Once all of this is connected, your Raspberry Pi will boot up. Connecting a monitor and keyboard will allow you to directly interact with the system’s terminal. Ideally, you’ll use SSH, but it may be helpful to have direct access in case there are any network issues. Eventually, the SSD will serve as the boot media and primary storage device for the server; however, we can’t modify its partitions while it’s serving as the boot device. So, we’ll use a thumb drive as the boot media device until we complete the partitioning.

When first connecting from the wired keyboard and monitor, let all of the startup processes finish running (these will hopefully have brackets with the word Success in green). If the first boot fails, try to redo the boot again (plug in the power and boot media). If that doesn’t work, try re-flashing the image and restarting the boot process. Then, type in the name of the User ID you wish to login with, in my case it’s chris. Then, enter the password (no characters will show up as you type it in) and hit enter. You’ll see a plaintext message telling you the OS version, some system information (memory usage, temperature, etc.), and you’ll see a line where you can enter commands (the CLI). In my case, it looks like this: chris@ubuntu-pi-server:~$

Now you can run some basic commands to see where you are and what you have available to you. Spoiler alert, you’re in your home directory and have no files. In my case it’s /home/chris, where the /home directory is owned by root and /chris is owned by my user—UID 1000 (the default for new users on a fresh system/image). Right now your directory will be empty, outside of some hidden folders like .ssh. More on this later.

One final note, on why I chose Ubuntu Server LTS over Raspberry Pi’s OS. The reason is pretty simple: Ubuntu is a true open source OS that is widely used personally, professionally, and academically. I wasn’t sure if Raspberry Pi’s OS options would provide a similar level of education. Furthermore, as a complete newbie to server computing, I wanted to use an operating system that I knew had a long history and devoted community, because it would have ample resources for self-led research and troubleshooting.

Next we’ll cover what happened during the boot process, the basic structure of the Linux Server OS, and some important information related to permissions, before we move on to basic networking concepts and configurations.

Linux Server Basics

Key Terms

System Initialization Concepts:

- Initialization: The process of starting up the operating system and bringing it to an operational state.

- Boot Process: The sequence of steps that occur from powering on a computer to loading the operating system.

- BIOS/UEFI: Firmware interfaces that initialize hardware and start the boot process.

- Kernel: The core component of an operating system that manages system resources and hardware.

Systemd Terminology:

- systemd: The init system and system/service manager used by modern Linux distributions.

- Unit: Systemd’s representation of system resources, including services, devices, and mount points.

- Service Unit: Configuration files with .service extension that define how to start, stop, and manage daemons.

- Socket Unit: Configuration files with .socket extension that define communication sockets.

- Timer Unit: Configuration files with .timer extension that trigger actions at specified times.

- Target: A grouping of units that represents a system state (similar to runlevels in older systems).

- Daemon: A background process that runs continuously, providing services.

File System and Directory Terminology:

- FHS (Filesystem Hierarchy Standard): The standard directory structure and contents of Unix-like operating systems.

- Root Directory (

/): The top-level directory in a Linux filesystem hierarchy. - home Directory (

/home): Contains user home directories where personal files are stored. - etc Directory (

/etc): Contains system-wide configuration files. - bin Directory (

/bin): Contains essential command binaries needed for system functionality. - Hidden Files/Directories: Files or directories that begin with a dot (.) and don’t appear in default directory listings.

- Shell Configuration Files: Files like .bashrc and .profile that configure the user’s command-line environment.

User and Permissions Terminology:

- User: An account on a Linux system with specific privileges and access rights.

- Group: A collection of users with shared permissions to files and directories.

- UID (User ID): A numerical identifier assigned to each user on a Linux system.

- GID (Group ID): A numerical identifier assigned to each group on a Linux system.

- Permission: Access rights assigned to users and groups determining what actions they can perform on files and directories.

- chmod: Command used to change file and directory permissions.

- chown: Command used to change file and directory ownership.

- umask: Default permissions applied to newly created files and directories.

- ACL (Access Control List): Extended permissions that provide more granular control than traditional Unix permissions.

- setuid/setgid: Special permissions that allow users to execute files with the permissions of the file owner or group.

- Sticky Bit: A special permission bit that restricts file deletion in shared directories.

First Boot Process

When you first boot a fresh Ubuntu Server LTS image on your Raspberry Pi, several important initialization processes occur that don’t happen during subsequent boots. The first boot of your Ubuntu Server LTS on the Raspberry Pi is fundamentally different from subsequent boots because it performs one-time initialization tasks. While later boots will simply load the configured system, this first boot sets up critical system components.

- Hardware Detection: The system performs comprehensive hardware detection to identify and configure your Raspberry Pi’s components.

- Initial RAM Disk (initrd): The bootloader loads a temporary filesystem into memory that contains essential drivers and modules needed to mount the real root filesystem.

- Filesystem Check and Expansion: On first boot, the system checks the integrity of the filesystem and often expands it to utilize the full available space on your Flash Drive.

- Cloud-Init Processing: Ubuntu Server uses cloud-init to perform first-boot configuration tasks (the processes you see running on the monitor on startup)

- Setting the hostname

- Generating SSH host keys

- Creating the default user account

- Running package updates

- Machine ID Generation: A unique machine ID is generated and stored in /etc/machine-id.

- Network Configuration: The system attempts initial network setup based on detected hardware.

The key difference is that subsequent boots skip these initialization steps since they’ve already been completed, making them significantly faster.

Service Management with systemd

Systemd is the modern initialization and service management system for Linux. It’s responsible for bootstrapping the user space and managing all processes afterward. Key components of systemd include:

- Units: Everything systemd manages is represented as a “unit” with a corresponding configuration file. Units include:

- Service units (

.service): Define how to start, stop, and manage daemons (background processes that are always on) - Socket units (

.socket): Manage network/IPC sockets - Timer units (

.timer): Trigger other units based on timers - Mount units (

.mount): Control filesystem mount points

- Service units (

- Target units: Represent system states (similar to runlevels in older systems)

multi-user.target: Traditional text-mode systemgraphical.target: Graphical user interfacenetwork.target: Network services are ready

For example, let’s take a look at a generic SSH service file:

[Unit]

Description=OpenSSH server daemon

Documentation=man:sshd(8) man:sshd_config(5)

After=network.target auditd.service

Wants=network.target

[Service]

EnvironmentFile=-/etc/default/ssh

ExecStartPre=/usr/sbin/sshd -t

ExecStart=/usr/sbin/sshd -D $SSHD_OPTS

ExecReload=/usr/sbin/sshd -t

ExecReload=/bin/kill -HUP $MAINPID

KillMode=process

Restart=on-failure

RestartPreventExitStatus=255

Type=notify

[Install]

WantedBy=multi-user.targetTo break this down:

- [Unit]: Metadata and dependencies

Description: Human-readable service descriptionDocumentation: Where to find documentationAfter: Units that should be started before this oneWants: Soft dependencies

- [Service]: Runtime behavior

ExecStart: Command to start the serviceExecReload: Command to reload the serviceRestart: When to restart the serviceType: How systemd should consider the service started

- [Install]: Installation information

WantedBy: Target that should include this service

Ubuntu Server’s current standard is systemd, but previously it was SysV. A few key improvements include:

- Parallel Service Startup: Systemd can start services in parallel, improving boot times.

- Dependency Management: Systemd handles service dependencies more effectively.

- Service Supervision: Systemd continuously monitors and can automatically restart services.

- Socket Activation: Services can be started on-demand when a connection request arrives.

Managing services is easy using the command line, a crucial component of headless applications. A few examples are:

- View service status:

systemctl status ssh - Start a service:

sudo systemctl start ssh - Stop a service:

sudo systemctl stop ssh - Enable at boot:

sudo systemctl enable ssh - Disable at boot:

sudo systemctl disable ssh - View logs:

journalctl -u ssh

Understanding Your Home Directory

Now that you’ve logged in and can work on your server, you may wonder where you are and what’s there. Running pwd will return the file path of your current location. Running ls -a will show you all available files and directories in your current location. Running these, you’ll see a few things specifically for Shell configuration (your terminal/CLI):

.bash_history: Contains a record of commands you’ve executed in the bash shell. This helps with command recall using the up arrow or history command..bash_logout: Executed when you log out of a bash shell. Often used for cleanup tasks like clearing the screen..bashrc: The primary bash configuration file that’s loaded for interactive non-login shells. It defines aliases, functions, and shell behavior. When you open a terminal window, this file is read..profile: Executed for login shells. It typically sets environment variables and executes commands that should run once per login session, not for each new terminal.

# Sample .bashrc section

# enable color support of ls and also add handy aliases

if [ -x /usr/bin/dircolors ]; then

test -r ~/.dircolors && eval "$(dircolors -b ~/.dircolors)" || eval "$(dircolors -b)"

alias ls='ls --color=auto'

alias grep='grep --color=auto'

alias fgrep='fgrep --color=auto'

alias egrep='egrep --color=auto'

fi

# some more ls aliases

alias ll='ls -alF'

alias la='ls -A'

alias l='ls -CF'Beyond those, you’ll also find hidden application directories:

.cache: Contains non-essential data that can be regenerated as needed. Applications store temporary files here to improve performance on subsequent runs..dotnet: Contains .NET Core SDK and runtime files if you’ve installed the .NET development platform..ssh: Stores SSH configuration files and keys:authorized_keys: Lists public keys that can authenticate to your accountubuntu_pi_ecdsa&ubuntu_pi_ecdsa.pub: Your private and public ECDSA keys (More on this in the SSH Section)known_hosts: Tracks hosts you’ve connected to previouslyssh_config: Optional configuration file for SSH connections

.sudo_as_admin_successful: A marker file created when you successfully use sudo. Its presence suppresses the “sudo capabilities” message when opening a terminal..vscode-server: Created when you connect to your server using Visual Studio Code’s remote development feature. Contains the VS Code server components. (More on this in the Remote Development with VS Code Section).wget-hsts: Wget’s HTTP Strict Transport Security database. Tracks websites that require secure (HTTPS) connections.

The Root Filesystem

The Linux filesystem follows the Filesystem Hierarchy Standard (FHS), which defines the directory structure and contents of Unix-like systems. Here’s a breakdown of key directories:

/bin: Contains essential command binaries (programs) needed for basic system functionality. These commands are available to all users and are required during boot or in single-user mode.- Historical note: Originally separated from /usr/bin because early Unix systems had limited disk space on the root partition.

/boot: Contains boot loader files including the Linux kernel, initial RAM disk (initrd), and bootloader configuration (GRUB).- For Raspberry Pi, this contains the firmware and various boot-related files.

/dev: Contains device files that represent hardware devices. These are not actual files but interfaces to device drivers in the kernel.- Example: /dev/sda represents the first SATA disk.

/etc: Contains system-wide configuration files. The name originated from “et cetera” but is now often interpreted as “Editable Text Configuration.” Critical files include:/etc/fstab: Filesystem mount configuration/etc/passwd: User account information/etc/ssh/sshd_config: SSH server configuration

/home: Contains user home directories where personal files and user-specific configuration files are stored./lib: Contains essential shared libraries needed by programs in /bin and system boot.- On modern 64-bit systems, you’ll also find /lib64 for 64-bit libraries.

/media: Mount point for removable media like USB drives and DVDs./mnt: Temporarily mounted filesystems. This is often used as a manual mount point./opt: Optional application software packages. Used for third-party applications that don’t follow the standard file system layout./proc: A virtual filesystem providing process and kernel information. Files here don’t exist on disk but represent system state.- Example: /proc/cpuinfo shows CPU information.

/root: Home directory for the root user. Separated from /home to ensure it’s available even if /home is on a separate partition./run: Runtime data for processes started since last boot. This is a tmpfs (memory-based) filesystem./sbin: System binaries for system administration tasks, typically only usable by the root user./srv: Data for services provided by the system, such as web or FTP servers./snap: The /snap directory is, by default, where the files and folders from installed snap packages appear on your system./sys: Another virtual filesystem exposing device and driver information from the kernel. Provides a more structured view than /proc./tmp: Temporary files that may be cleared on reboot. Applications should not rely on data here persisting./usr: Contains the majority of user utilities and applications. Originally stood for “Unix System Resources.”/usr/bin: User commands/usr/lib: Libraries for the commands in /usr/bin/usr/local: Locally installed software/usr/share: Architecture-independent data

/var: Variable data files that change during normal operation:/var/log: System log files/var/mail: Mail spool/var/cache: Application cache data/var/spool: Spool for tasks waiting to be processed (print queues, outgoing mail)

The core philosophy behind this structure separates:

- Static vs. variable content

- Shareable vs. non-shareable files

- Essential vs. non-essential components

Understanding this hierarchy helps you navigate any Linux system and locate important files intuitively.

User and Group Permissions

Basics

Linux inherits its permission system from Unix, providing a robust framework for controlling access to files and directories. Understanding this system is essential for maintaining security and proper functionality of your Raspberry Pi server, as well as any Linux-based system. At its core, the Linux permission model operates with three basic permission types applied to three different categories of users:

- Permission Types:

- Read (r): Allows viewing file contents or listing directory contents

- Write (w): Allows modifying file contents or creating/deleting files within a directory

- Execute (x): Allows running a file as a program or accessing files within a directory

- User Categories:

- Owner (u): The user who owns the file or directory

- Group (g): Users who belong to the file’s assigned group

- Others (o): All other users on the system

It’s not only important to know how to set permissions, but also how to view existing ones. When you run ls -l in a directory, you’ll see a detailed listing including permission information:

-rw-r--r-- 1 chris chris 1234 May 6 14:32 example.txtIn this example, the owner can read and write, while group members and others can only read. The first string of characters -rw-r--r-- represents the permissions:

- First character: File type (- for regular file, d for directory, l for symbolic link)

- Characters 2-4: Owner permissions (rw-)

- Characters 5-7: Group permissions (r–)

- Characters 8-10: Others permissions (r–)

chmod

The chmod command modifies file permissions in Linux. You can use it in two ways: symbolic mode or numeric (octal) mode.

Symbolic mode uses letters to represent permission categories (u, g, o, a) and permissions (r, w, x):

# Give the owner execute permission

chmod u+x script.sh

# Remove write permission from group and others

chmod go-w important_file.txt

# Set read and execute for everyone (a=all users)

chmod a=rx application

# Add write permission for owner and group

chmod ug+w shared_document.txtEach symbol has a specific meaning:

u: Owner permissionsg: Group permissionso: Other user permissionsa: All permissions+: Add permissions-: Remove permissions=: Set exact permissions

Octal mode represents permissions as a 3-digit number, where each digit represents the permissions for owner, group, and others:

Read (r)= 4Write (w)= 2Execute (x)= 1

Permissions are calculated by adding these values:

7 (4+2+1)= Read, write, and execute6 (4+2)= Read and write5 (4+1)= Read and execute4 (4)= Read only0= No permissions

# rwxr-xr-x (755): Owner can read, write, execute; group and others can read and execute

chmod 755 script.sh

# rw-r--r-- (644): Owner can read and write; group and others can read only

chmod 644 document.txt

# rwx------ (700): Owner has all permissions; group and others have none

chmod 700 private_directoryBeyond the basic rwx permissions, Linux has three special permission bits:

setuid (4000): When set on an executable file, it runs with the privileges of the file owner instead of the user executing it.setgid (2000): Similar to setuid but for group permissions. When set on a directory, new files created within inherit the directory’s group.sticky bit (1000): When set on a directory, files can only be deleted by their owner, the directory owner, or root (commonly used for /tmp).

chown

The chown command changes the owner and/or group of files and directories. Do not change ownership in the root directories because many require specific ownership/permissions to function properly.

# Change the owner of a file

sudo chown chris file.txt

# Change both owner and group

sudo chown chris:developers project_files

# Change only the group

sudo chown :developers shared_documents

# Change recursively for a directory and all its contents

sudo chown -R chris:chris /home/chris/projectsThe flags do the following:

-R, --recursive: Change ownership recursively-c, --changes: Report only when a change is made-f, --silent: Suppress most error messages-v, --verbose: Output a diagnostic for every file processed

# Verbose recursive ownership change

sudo chown -Rv chris:developers /opt/applicationUnderstanding Permissions

Linux manages permissions through users and groups:

- Each user has a unique User ID (UID)

- Each group has a unique Group ID (GID)

- Users can belong to multiple groups

- The first 1000 UIDs/GIDs are typically reserved for system users/groups

Important files include:

/etc/passwd: Contains basic user account information- Fields: username, password placeholder, UID, primary GID, full name, home directory, login shell

chris:x:1000:1000:Chris Kornaros:/home/chris:/bin/bash/etc/shadow: Contains encrypted passwords and password policy information- Fields: username, encrypted password, days since epoch of last change, min days between changes, max days password valid, warning days, inactive days, expiration date

chris:$6$xyz...hash:19000:0:99999:7:::/etc/group: Contains group definitions- Fields: group name, password placeholder, GID, comma-separated list of members

developers:x:1001:chris,bob,aliceThere are two categories of groups you should understand, Primary and Supplementary:

- Primary Group: Set in /etc/passwd, used as the default group for new files

- Supplementary Groups: Additional groups a user belongs to, defined in /etc/group

You can view your current user’s groups with the groups command, or view them for a specific user with groups chris (replace chris with the name of the user). That being said, directory permissions differ slightly from file permissions:

- Read (r): List directory contents

- Write (w): Create, delete, or rename files within the directory

- Execute (x): Access files within the directory (crucial for navigation)

A common confusion: You may have read permission for a file but not execute permission for its parent directory, preventing access.

The umask (user mask) determines the default permissions for newly created files and directories:

- Default for files: 666 (rw-rw-rw-)

- Default for directories: 777 (rwxrwxrwx)

- The umask is subtracted from these defaults, for example, a umask of 022 results in:

- Files: 644 (rw-r–r–)

- Directories: 755 (rwxr-xr-x)

# View current umask (in octal)

umask

# Set a new umask

umask 027 # More restrictive: owner full access, group read/execute, others no accessTraditional Unix permissions have limitations regarding inheritance: new files don’t inherit permissions from parent directories and changing permissions doesn’t affect existing files. Modern solutions, however, include: the setgid bit on directories for group inheritance and ACLs (Access Control Lists) with default entries that apply to new files. To set up a collaborative directory with proper permissions:

# Create a shared directory for developers

sudo mkdir /opt/projects

sudo chown chris:developers /opt/projects

sudo chmod 2775 /opt/projects # setgid bit ensures new files get 'developers' groupAdvanced Permission Concepts

As I previously wrote, part of the modern permission solutions include ACLs, or Access Control Lists. ACLs extend the traditional permission model to allow specifying permissions for multiple users and groups. When ACLs are in use, ls -l will show a + after the permission bits. Here’s a basic example of how to create and manage an ACL:

# Install ACL support (if not already installed)

sudo apt install acl

# Set an ACL allowing a specific user read access

setfacl -m u:chris:r file.txt

# Set an ACL allowing a specific group write access

setfacl -m g:developers:rw file.txt

# Set default ACLs on a directory (inherited by new files)

setfacl -d -m g:developers:rw directory/

# View ACLs on a file

getfacl file.txt-rw-rw-r--+ 1 chris developers 1234 May 6 14:32 file.txtA few final notes on permissions that are especially relevant for this project, because you’ll be working with external storage devices:

- Not all filesystems support the same permission features:

ext4: Full support for traditional permissions, ACLs, and extended attributesNTFS (via NTFS-3G): Simulated Unix permissions, basic ACL supportFAT32: No native permission support (mounted with fixed permissions)exFAT: No native permission support

- Common Permission Patterns:

- Configuration Files: 644 or 640 (owner can edit, restricted read access)

- Program Binaries: 755 (everyone can execute, only owner can modify)

- Web Content: 644 for regular files, 755 for directories

- SSH Keys: 600 for private keys (owner only), 644 for public keys

- Scripts: 700 or 750 (executable by owner or group)

Computer Networking

This section provides a brief example of how to connect your server to WiFi. It assumes you are already connected using the wireless network settings you configured in the Requirements Section. That being said, I’ll also go over some basic networking concepts and background information. As a result, some of the decisions and terminology in this guide will make more sense (it also helps me remember what I’m doing and why).

Key Terms

Basic Networking Concepts:

- Protocol: A set of rules that determine how data is transmitted between devices on a network. Examples include TCP, UDP, and HTTP.

- MAC Address: Media Access Control address; a unique hardware identifier assigned to network interfaces. It’s a 48-bit address (e.g., 00:1A:2B:3C:4D:5E) permanently assigned to a network adapter.

- IP Address: A numerical label assigned to each device on a network that uses the Internet Protocol. Functions like a postal address for devices.

- Packet: A unit of data transmitted over a network. Includes both the data payload and header information for routing.

- Subnet: A logical subdivision of an IP network that allows for more efficient routing and security segmentation.

- Gateway: A network node that serves as an access point to another network, typically connecting a local network to the wider internet.

- DNS: Domain Name System; translates human-readable domain names (like google.com) into IP addresses computers can understand.

- DHCP: Dynamic Host Configuration Protocol; automatically assigns IP addresses and other network configuration parameters to devices.

- CIDR Notation: Classless Inter-Domain Routing; a method for representing IP addresses and their subnet masks in a compact format (e.g., 192.168.1.0/24). The number after the slash indicates how many bits are used for the network portion of the address.

- OSI Model: Open Systems Interconnection model; a conceptual framework that standardizes the functions of a communication system into seven abstraction layers, from physical transmission to application interfaces.

- TCP/IP Model: Transmission Control Protocol/Internet Protocol model; a four-layer practical implementation of network communications used as the foundation of the internet, simplifying the OSI model for real-world application.

Network Types and Components:

- LAN: Local Area Network; a network confined to a small geographic area, like a home or office.

- WAN: Wide Area Network; connects multiple LANs across large geographic distances.

- Router: A device that forwards data packets between computer networks, determining the best path for data transmission.

- Switch: A networking device that connects devices within a single network and uses MAC addresses to forward data to the correct destination.

- Bandwidth: The maximum data transfer rate of a network connection, measured in bits per second (bps).

- Latency: The delay between sending and receiving data, typically measured in milliseconds.

Linux Networking Terminology:

- Interface: A connection between a device and a network. In Linux, these have names like eth0 (Ethernet) or wlan0 (wireless).

- Netplan: Ubuntu’s default network configuration tool that uses YAML files to define network settings.

- systemd-networkd: A system daemon that manages network configurations in modern Linux distributions.

- NetworkManager: An alternative network management daemon that provides detection and configuration for automatic network connectivity.

- Socket: An endpoint for sending or receiving data across a network, defined by an IP address and port number.

ip: A powerful, modern Linux networking utility that replaces older commands likeifconfig,route, andarp. Used to show/manipulate routing, devices, policy routing, and tunnels.

Security Concepts:

- Firewall: Software or hardware that monitors and filters incoming and outgoing network traffic based on predetermined security rules.

- fail2ban: An intrusion prevention software that protects servers from brute-force attacks by monitoring log files and temporarily banning IP addresses that show malicious behavior.

- ufw: Uncomplicated Firewall; a user-friendly interface for managing iptables firewall rules in Linux, designed to simplify the process of configuring a firewall.

- SSH: Secure Shell; a cryptographic network protocol for secure data communication and remote command execution.

- Encryption: The process of encoding information to prevent unauthorized access.

- Port: A virtual point where network connections start and end. Ports are identified by numbers (0-65535).

- NAT: Network Address Translation; allows multiple devices on a local network to share a single public IP address.

- VPN: Virtual Private Network; extends a private network across a public network, enabling secure data transmission.

Computer Networking

Simply put, a computer network is a collection of interconnected devices that can communicate with each other using a set of rules called protocols. Networking allows devices to share resources, exchange data, and collaborate on tasks. On a deeper level, it helps to understand the conceptual models that describe how data moves through a network. Before we dive in, let’s go over some basic terminology.

The OSI Model

Now that you understand some common terms and concepts, we can dive into the conceptual models. The Open Systems Interconnection (OSI) Model divides networking into seven layers, each handling specific aspects of network communication.

- Physical Layer: Physical medium, electrical signals, cables, and hardware

- Data Link Layer: Physical addressing (MAC addresses), error detection

- Network Layer: Logical addressing (IP addresses), routing

- Transport Layer: End-to-end connections, reliability (TCP/UDP)

- Session Layer: Session establishment, management, and termination

- Presentation Layer: Data translation, encryption, compression

- Application Layer: User interfaces and services (HTTP, SMTP, etc.)

The TCP/IP Model

The OSI Model is conceptual, but the TCP/IP Model is more practical and has four layers.

- Network Access Layer: Combines OSI’s Physical and Data Link layers

- Internet Layer: Similar to OSI’s Network layer (IP)

- Transport Layer: Same as OSI’s Transport layer (TCP/UDP)

- Application Layer: Combines OSI’s Session, Presentation, and Application layers

Understanding these network models isn’t just theoretical—it provides a systematic approach to troubleshooting. When connection issues arise, you can methodically check each layer: Is the physical connection working? Is IP addressing correct? Is the transport protocol functioning? This layered approach helps isolate and resolve problems efficiently.

Network Protocols

Remember, a protocol is a set of rules that determine how data is transmitted between devices on a network. You can think of protocols in one of two camps, Connection-Oriented and Connectionless. Within these camps, two protocols stand out as the backbone of the internet’s data transfers: TCP and UDP.

TCP (Transmission Control Protocol) is a connection-oriented protocol that establishes a dedicated end-to-end connection before transmitting data. TCP is used when reliability is more important than speed (e.g., web browsing, email, file transfers). It has four defining traits:

- Reliability: Guarantees delivery of packets in the correct order

- Flow Control: Prevents overwhelming receivers with too much data

- Error Detection: Identifies and retransmits lost or corrupted packets

- Handshake Process: Three-way handshake establishes connections

Common TCP Applications: Web browsing (HTTP/HTTPS), email (SMTP, IMAP), file transfers (FTP, SCP), and secure shell (SSH)

UDP (User Datagram Protocol) is a connectionless protocol that sends data without establishing a dedicated connection. UDP is used for real-time applications (e.g., video streaming, VoIP, online gaming). It also has four defining traits:

- Simplicity: No connection setup or maintenance overhead

- Speed: Faster than TCP due to fewer checks and guarantees

- Lower Reliability: No guarantee of delivery or correct ordering

- Efficiency: Better for real-time applications where occasional data loss is acceptable

Common UDP Applications: DNS lookups, DHCP address assignment, streaming video, VoIP calls, and online gaming

Beyond those, there are some other important protocols to know, because they provide the foundation for most of the user-friendly features we are used to today.

- IP (Internet Protocol)

- IP handles addressing and routing of packets across networks. There are two versions in common use:

- IPv4: 32-bit addresses (e.g., 192.168.1.1)

- IPv6: 128-bit addresses (e.g., 2001:0db8:85a3:0000:0000:8a2e:0370:7334)

- ICMP (Internet Control Message Protocol)

- ICMP helps diagnose network issues by sending error messages and operational information. The ping command uses ICMP to test connectivity.

- HTTP/HTTPS (Hypertext Transfer Protocol)

- HTTP and its secure variant HTTPS are application-layer protocols used for web browsing.

- DNS (Domain Name System)

- DNS translates human-readable domain names (like google.com) into IP addresses.

Many standard protocols have secure variants that add encryption: HTTP becomes HTTPS via TLS/SSL, telnet is replaced by SSH, and FTP gives way to SFTP or FTPS. These secure protocols wrap the original protocol’s data in encryption layers, protecting sensitive information from interception or tampering.

Network Connections

There are two ways for systems to connect to the internet: wired and wireless.

Wired Connections

Ethernet is the most common wired networking technology. Its name comes from the term ether referring to a theoretical medium that was believed to carry light waves through space. It was developed by Robert Metcalf and David Boggs at Xerox’s PARC facility in the 1970s. The goal was to provide a more stable LAN which could facilitate high-speed transfers between computers and laser printers. They succeeded, and had improved on a precursor’s, ALOHAnet, design by creating a system that could detect collisions—when two devices try to transmit at the same time. Here are some key traits:

- Reliability: Less susceptible to interference

- Speed: Typically faster and more stable than wireless

- Security: Harder to intercept without physical access

- Connectors: RJ45 connectors on Ethernet cables

- Standards: 10/100/1000 Mbps (Gigabit) are common speeds

| Cable Type | Max Speed | Max Distance | Notes |

|---|---|---|---|

| Cat 5e | 1 Gbps | 100 meters | Minimum for modern networks |

| Cat 6 | 10 Gbps | 55 meters | Better crosstalk protection |

| Cat 6a | 10 Gbps | 100 meters | Improved shielding |

| Cat 7/8 | 40+ Gbps | 100 meters | Fully shielded, enterprise use |

For most Raspberry Pi projects, Cat 5e or Cat 6 cables are more than sufficient.

Wireless Connections

Wi-Fi allows devices to connect to networks without physical cables. Its name is not short for Wireless Fidelity, but actually a marketing choice by the brand-consulting firm Interbrand. They chose the name because it sounded similar to Hi-Fi. Wi-Fi was developed by numerous researchers and engineers, but the key breakthrough was by Dr. John O’Sullivan from CSIRO in Australia. His work focused on a wireless LAN, which would eventually become the IEEE (Institute of Electrical and Electronics Engineers) 802.11 standard in 1997. Eventually, Apple would help with widespread adoption by including the AirPort feature on its laptops, enabling Wi-Fi connectivity out of the box. Here are some key traits:

- Convenience: No cables required, more flexible placement

- Standards: 802.11a/b/g/n/ac/ax (Wi-Fi 6) with varying speeds and ranges

- Security: WEP, WPA, WPA2, and WPA3 encryption standards (WPA2/WPA3 recommended)

Network Interface Names in Linux

In Ubuntu Server, network interfaces follow a predictable naming convention:

- eth0, eth1: Traditional Ethernet interface names

- enp2s0, wlp3s0: Modern predictable interface names (based on device location)

- Ubuntu moved to predictable interface naming to solve a critical problem:

- In traditional naming (eth0, eth1), names could change unexpectedly after hardware changes or reboots.

- Modern names like

enp2s0encode the physical location of the network card (PCI bus 2, slot 0), ensuring the same interface always gets the same name regardless of detection order.

- Ubuntu moved to predictable interface naming to solve a critical problem:

- wlan0, wlan1: Traditional wireless interface names

IP Addressing

An IP (Internet Protocol) Address is a unique identifier for a device on the internet, or a LAN. There are two different kinds of addresses: IPv4 and IPv6.

IPv4 uses 32-bit addresses, providing approximately 4.3 billion unique addresses (now largely exhausted):

- Format: Four octets (numbers 0-255) separated by dots (e.g., 192.168.1.1)

- Classes: Traditionally divided into classes A, B, C, D, and E

- Private Ranges:

- 10.0.0.0 to 10.255.255.255 (10.0.0.0/8)

- 172.16.0.0 to 172.31.255.255 (172.16.0.0/12)

- 192.168.0.0 to 192.168.255.255 (192.168.0.0/16)

- Subnet Masks: Used to divide networks (e.g., 255.255.255.0 or /24)

- Issues: IPv4 address exhaustion due to limited capacity

IPv6 uses 128-bit addresses, providing approximately 3.4×10^38 unique addresses:

- Format: Eight groups of four hexadecimal digits separated by colons (e.g., 2001:0db8:85a3:0000:0000:8a2e:0370:7334)

- Shorthand: Leading zeros in a group can be omitted, and consecutive groups of zeros can be replaced with :: (only once)

- Example: 2001:db8:85a3::8a2e:370:7334

- Address Types:

- Unicast: Single interface

- Anycast: Multiple interfaces (closest responds)

- Multicast: Multiple interfaces (all respond)

- Benefits: More addresses, improved security, simplified headers, no need for NAT

One final note, CIDR (Classless Inter-Domain Routing) notation represents IP addresses and their associated routing prefix:

- Format: IP address followed by “/” and prefix length (e.g., 192.168.1.0/24)

- Calculation: A prefix of /24 means the first 24 bits are the network portion, leaving 8 bits for hosts (allowing 2^8 = 256 addresses)

For a network using CIDR notation 192.168.1.0/24:

- The /24 means the first 24 bits (3 octets) identify the network

- This leaves 8 bits for hosts (2^8 = 256 potential addresses)

- Subtract 2 for network address (.0) and broadcast address (.255)

- Result: 254 usable IP addresses (192.168.1.1 through 192.168.1.254)

Ubuntu Server Networking Tools

Now that we’ve covered the basic concepts, it’s time to dive into the actual commands and tools that will let you configure and manage your server’s network. To start, you can view network interfaces and their statuses using the command ip link show, or ip addr show for your IP Address configuration. You can view only the IPv4 or IPv6 addresses using ip -4 addr or ip -6 addr, respectively.

Testing Connectivity

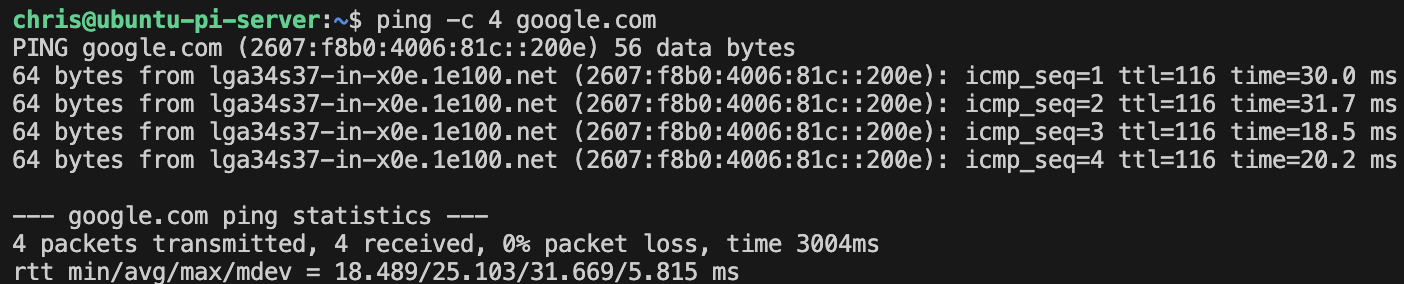

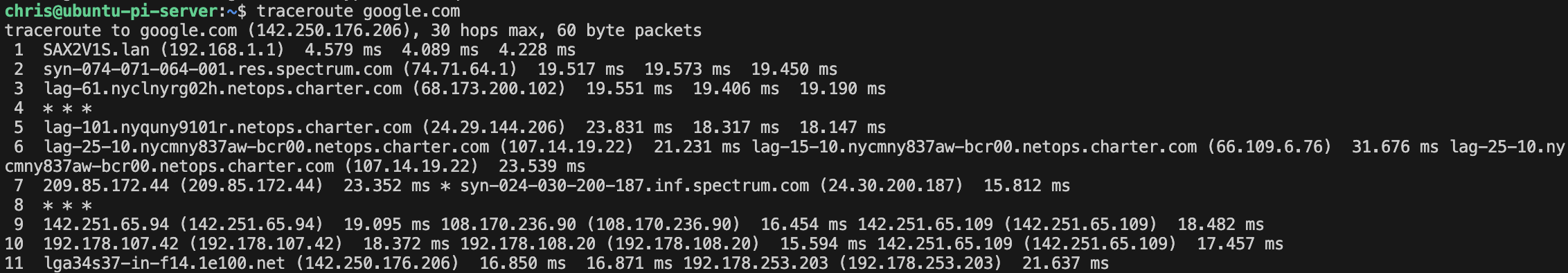

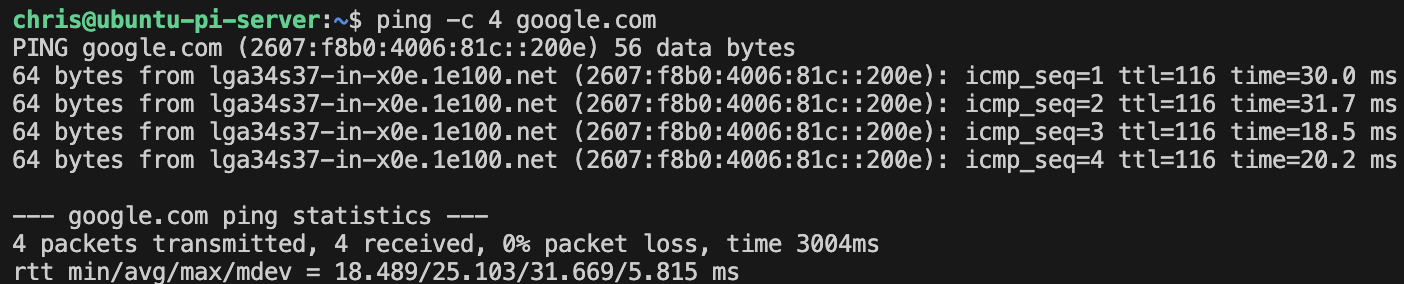

Although it seems redundant if you already viewed your IP addresses, you can also test connectivity using the ping and traceroute commands. These will be more useful for checking your server’s network status from your desktop or laptop.

Test basic connectivity to a host:

ping -c 4 google.com

Trace the route to a destination:

# First update and install your packages

sudo apt update && sudo apt upgrade -y

# Install traceroute

sudo apt install traceroute -y

# Run traceroute

traceroute google.com

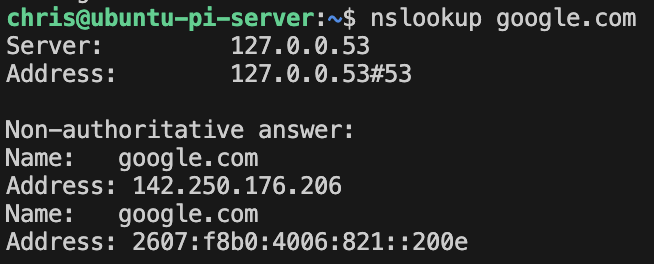

Check the DNS resolution:

nslookup google.com

# dig google.com

Viewing Network Statistics

You can view more specific network information with the ss command. This command’s name is an acronym for socket statistics and is used as a replacement for the older netstat plan because it offers faster performance and a more detailed output. Additionally, you can filter by specific protocol.

ss -tulnThe tuln flag is made up of four separate flags:

-t, displays only TCP sockets-u, displays only UDP sockets-l, displays listening sockets-n, displays addresses numerically, instead of resolving them

Configuration Files

Finally, there are a few crucial configuration files that will handle the bulk of your networking. In Ubuntu Server, network interfaces and DNS configurations are configured and stored in the /etc/ directory.

Network Interfaces:

/etc/netplan/:Contains YAML configuration files for Netplan/etc/network/interfaces:Configuration method (if NetworkManager is used)

DNS Configuration:

- /etc/resolv.conf: DNS resolver configuration

- /etc/hosts: Static hostname to IP mappings

- /etc/hostname: System hostname

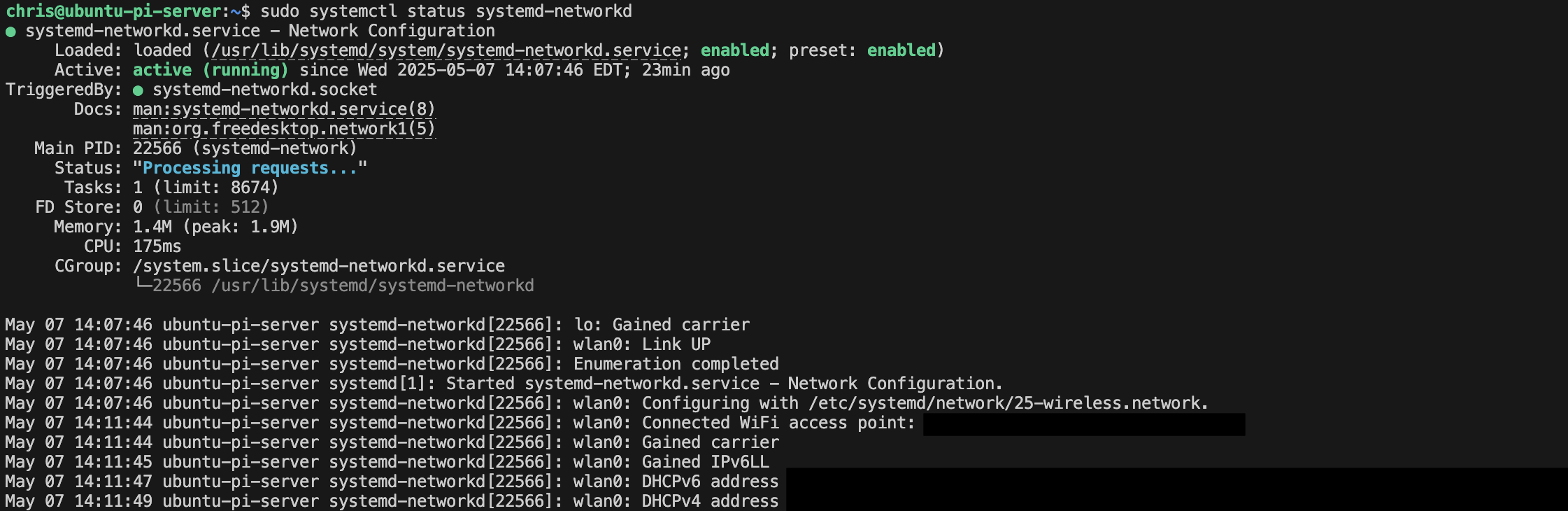

systemd-networkd

systemd-networkd is a system daemon that manages network configurations in modern Linux distributions. It’s part of the systemd suite and provides network configuration capabilities through simple configuration files.

It generally works using three key components:

- Configuration Files: You define network settings in .network files located in /etc/systemd/network/

- Service Management: systemd-networkd runs as a system service to apply and maintain network configurations

- Integration: Works closely with other systemd components for DNS resolution and networking

Basic Wired Configuration

systemd-networkd uses configuration files with .network extension. Each file consists of sections with key-value pairs. A basic configuration for a static IP would look like this:

# /etc/systemd/network/20-wired.network

[Match]

Name=eth0

[Network]

Address=192.168.1.100/24

Gateway=192.168.1.1

DNS=8.8.8.8

DNS=8.8.4.4Let’s walk through the configuration file’s structure:

- File Naming Convention:

- The file is named 20-wired.network.

- The number prefix (20-) determines the processing order (lower numbers processed first), allowing you to create prioritized configurations.

- The suffix .network tells systemd-networkd that this is a network interface configuration file.

- [Match] Section:

- This critical section determines which network interfaces the configuration applies to.

Name=eth0: This specifies that the configuration should apply to the eth0 interface only.- You can use wildcards (e.g., eth* would match all Ethernet interfaces) or match by other properties such as MAC address using MACAddress=xx:xx:xx:xx:xx:xx.

- Behind the scenes:

- systemd-networkd scans all available network interfaces.

- Compares their properties against those specified in the [Match] section.

- If all properties match, the configuration is applied to that interface.

- [Network] Section:

- This section defines the network configuration parameters.

Address=192.168.1.100/24: Sets a static IPv4 address with CIDR notation. The /24 represents the subnet mask (equivalent to 255.255.255.0) and defines the network boundary.Gateway=192.168.1.1: Specifies the default gateway for routing traffic outside the local network. All traffic not destined for the local subnet (192.168.1.0/24) will be sent to this IP address.DNS=8.8.8.8andDNS=8.8.4.4: These are Google’s public DNS servers. When specified, systemd-networkd will automatically configure /etc/resolv.conf through systemd-resolved. You can specify multiple DNS servers, and they will be tried in order.

- Behind the scenes:

- systemd-networkd identifies the eth0 interface

- Assigns the static IP address using kernel netlink sockets

- Sets up the routing table to use the specified gateway

- Communicates with systemd-resolved to configure DNS settings

- Maintains this configuration and reapplies it if the interface goes down and back up

This configuration example works well for server environments where static, predictable networking is preferable. This is a declarative configuration, it describes the desired state, rather than the steps to achieve it, so repeated application produces the same result.

DHCP with a Wired Connection

If you want to add DHCP, you can use the following:

# /etc/systemd/network/20-wired.network

[Match]

Name=eth0

[Network]

DHCP=yesLet’s walk through the differences between a dynamic and static host configuration file structure:

DHCP=yes: This single line replaces all the static configuration parameters from the previous example.- It instructs systemd-networkd to obtain IP address, subnet mask, gateway, DNS servers, and other network parameters automatically from a DHCP server.

- You can also use

DHCP=ipv4to enable only IPv4 DHCP, orDHCP=ipv6for only IPv6 DHCP, orDHCP=yesfor both.

- Behind the scenes:

- systemd-networkd identifies the eth0 interface

- Initiates the DHCP client process, which follows the DHCP protocol’s Discover-Offer-Request-Acknowledge (DORA) sequence:

- The client broadcasts a DISCOVER message

- Available DHCP servers respond with OFFER messages

- The client selects an offer and sends a REQUEST

- The selected server sends an ACKNOWLEDGE

- Applies all the received network parameters (IP, subnet, gateway, DNS)

- Sets up a lease timer to manage when the configuration needs renewal

- Handles DHCP lease renewals automatically

- Advantages:

- Simplified configuration maintenance - no need to update parameters when network details change

- Works well in networks where IP assignments are centrally managed

- Automatically adapts to network changes

This configuration works well for environments where network parameters are dynamic or managed by a network admin through DHCP.

Wireless Configurations and wpa_supplicant

While wired connections are a basic part of networking, wireless connections require some extra work. More specifically, with systemd-networkd, you’ll need a tool like WPA. Wi-Fi Protected Access (WPA) emerged as a response to weaknesses in the original Wired Equivalent Privacy (WEP) security protocol. As wireless networks became ubiquitous, secure authentication and encryption mechanisms became essential. The Linux ecosystem offers several powerful tools for managing these connections:

wpa_supplicant: The core daemon that handles wireless connectionswpa_cli: A command-line interface for controlling wpa_supplicant dynamicallywpa_passphrase: A utility for generating secure password hashes

On the systemd-networkd side of things, the configuration is simple, broken down in detail below.

# /etc/systemd/network/25-wireless.network

[Match]

Name=wlan0

[Network]

DHCP=yes- Wireless Interface:

- The configuration targets wlan0, which is the traditional name for the first wireless network interface in Linux.

- Minimal Configuration:

- The file only has the information needed by systemd-networkd to manage the IP addressing aspect of the wireless connection. Note what’s missing: there’s no SSID, password, or security protocol information. This is because:

- systemd-networkd isn’t designed to handle wireless authentication and association

- This separation of concerns is intentional in the systemd design philosophy - specialized tools should handle specialized tasks

- Integration with wpa_supplicant:

wpa_supplicantis the standard Linux utility for managing wireless connectionssystemd-networkdhandles the network layer (Layer 3) configuration once wpa_supplicant establishes the data link layer (Layer 2) connection- This division follows the OSI model’s separation of network layers

- Behind the scenes:

- wpa_supplicant handles wireless scanning, authentication, and association

- Once a wireless link is established, it notifies the system

- systemd-networkd detects the active interface that matches wlan0

- It then initiates the DHCP client process to configure the network parameters

- This separation provides flexibility and security

- The wireless security operations are handled by a dedicated, well-tested component

- Networking remains under systemd-networkd’s control for consistency with other interfaces

While the systemd-networkd configuration is straightforward, things get more complicated with WPA. In standard wpa_supplicant configuration files, wireless passwords are often stored in plaintext. This creates a security vulnerability - anyone with access to the configuration file can view the password.

# /etc/wpa_supplicant/wpa_supplicant-wlan0.conf

ctrl_interface=/run/wpa_supplicant

update_config=1

network={

ssid="YourNetworkSSID"

psk="YourWiFiPassword"

}The wpa_passphrase tool solves this problem by generating a pre-computed hash of the password. Running this is straightforward as the basic syntax is wpa_passphrase [SSID] [passphrase]. Then, WPA outputs a hashed version of your password.

# Generate a hashed passphrase

wpa_passphrase "MyHomeNetwork" "MySecurePassword123"To then use the hashed password in your configuration, you can run the following command, just make sure to remove the line with the plaintext password from the config file after running it:

# Generate the hash and save directly to the configuration file

wpa_passphrase "MyHomeNetwork" "MySecurePassword123" | sudo tee -a /etc/wpa_supplicant/wpa_supplicant-wlan0.confWhen you use wpa_passphrase:

- It combines the SSID and password using the PBKDF2 (Password-Based Key Derivation Function 2) algorithm

- It applies 4096 iterations of HMAC-SHA1 for key strengthening

- The result is a 256-bit (32-byte) hash represented in hexadecimal format

- This hash is what’s actually used for the authentication process, not the original password

This approach makes it virtually impossible to reverse-engineer the original password from the hash.

# /etc/wpa_supplicant/wpa_supplicant-wlan0.conf

ctrl_interface=/run/wpa_supplicant

update_config=1

network={

ssid="MyHomeNetwork"

#psk="MySecurePassword123"

psk=a8e665b82929d810746c5a1208c472f9d2a25db67a6bc32a99fa4158aea02175

}Now that you have an idea about the basic structure of this file, let’s go over some key points:

- File Naming Convention:

- The file wpa_supplicant-wlan0.conf is specifically named to associate with the wlan0 interface.

- This naming allows different wireless interfaces to have different configurations.

- Configuration Directives:

ctrl_interface=/run/wpa_supplicant: This specifies the control interface path, which is a socket that allows programs to communicate with wpa_supplicant. This enables tools like wpa_cli to connect and control wpa_supplicant dynamically.update_config=1: Allows wpa_supplicant to update the configuration file automatically, useful when network details change or when using wpa_cli to add networks interactively.

- Network Block:

- The

network={}block defines a single wireless network configuration. ssid="YourNetworkSSID": The Service Set Identifier - the name of the wireless network to connect to.psk="YourWiFiPassword": The Pre-Shared Key - the password for the wireless network in plaintext.

- The

- Security Considerations:

- When you enter the password in plaintext as shown, wpa_supplicant will automatically convert it to a hash during processing.

- For better security, you can pre-hash the password using: wpa_passphrase “YourNetworkSSID” “YourWiFiPassword” and use the generated hash.

- The configuration file should have restricted permissions (600) to prevent other users from reading the passwords.

- Behind the scenes:

- wpa_supplicant reads this configuration at startup

- It scans for available wireless networks

- When it finds the specified SSID, it attempts to authenticate using the provided credentials

- It handles all the wireless protocol handshakes, including:

- Authentication and association with the access point

- Negotiation of encryption parameters

- Establishment of the encrypted channel

Once connected, it maintains the connection and handles roaming between access points with the same SSID. This configuration represents the minimum needed for a WPA/WPA2 Personal network connection. For more complex scenarios like enterprise authentication (WPA-EAP), additional parameters would be needed in the network block.

While the wpa_supplicant configuration files provide static configuration that saves when you write out, wpa_cli offers interactive, dynamic control over wireless connections. First ensure wpa_supplicant is running with a control interface by using ps aux | grep wpa_supplicant. If it’s running with the -c flag pointing to a config file that contains the ctrl_interface=/run/wpa_supplicant line, you can connect to it.

As a heads up, because we already created the wlan0 configuration file manually, the following steps are just for your knowledge. You’ll probably get some messages saying FAIL if you try to run some of the commands, but I think it’s good to learn them anyway—even though they aren’t necessarily important right now.

First, start the interactive mode with sudo wpa_cli, or specify the interface with sudo wpa_cli -i wlan0. Let’s go over some essential commands:

# Show help

help

# List all available commands

help all

# List available networks

scan

scan_results

# Show current status

status

# List configured networks

list_networks

# Add a new network

add_networkStep-By-Step: Adding a Network

> add_network

0

> set_network 0 ssid "MyNetwork"

OK

> set_network 0 psk "MyPassword"

OK

> set_network 0 priority 5

OK

> enable_network 0

OK

> save_config

OK

# For networks with hashed passwords

> add_network

0

> set_network 1 ssid "MyNetwork"

OK

> set_network 1 psk 0a1b2c3d4e5f6g7h8i9j0k1l2m3n4o5p6q7r8s9t0u1v2w3x4y5z

OK

> set_network 1 priority 10

OK

> enable_network 1

OK

> save_config

OKIt’s good to know that higher priority values (like 10) are preferred over lower ones (like 5). Now, you can also use wpa by running one-off commands or writing scripts with non-interactive mode. Additionally, you should know that when you first boot up your Raspberry Pi, the internet will be managed by Netplan (more on that later in this section). So, if you try to use wpa_cli save_config after creating the config files, that will return FAIL. Instead, once you write the files in the proper directories, run the reconfigure command.

# Scan for networks

sudo wpa_cli scan

sudo wpa_cli scan_results

# Save the current configuration

sudo wpa_cli save_config

# Reconnect to the network

sudo wpa_cli reconfigureFinally, you can monitor signal quality and connection status by using the signal_poll command. The RSSI (Received Signal Strength Indicator) shows connection quality in dBm. Values closer to 0 indicate stronger signals. Additionally, you can debug connection issues using status.

> signal_poll

RSSI=-67

LINKSPEED=65

NOISE=9999

FREQUENCY=5220

> status

bssid=00:11:22:33:44:55

freq=5220

ssid=MyNetwork

id=0

mode=station

pairwise_cipher=CCMP

group_cipher=CCMP

key_mgmt=WPA2-PSK

wpa_state=COMPLETED

ip_address=192.168.1.100Now that we’ve covered a lot of the great features available with wpa_cli, it’s time to continue configuring our server. You may remember me mentioning that the Raspberry Pi default networking tool is Netplan. Before we can enable and start the wlan0 service (meaning your primary wifi is on), we need to safely shut down Netplan.

Converting Netplan to networkd

It’s no surprise that Raspberry Pi uses Netplan as the default network manager because it provides a consistent interface for network configuration; however, there are several reasons you might want to use systemd-networkd directly:

- Simplicity: Direct systemd-networkd configuration eliminates a layer of abstraction

- Control: Direct access to all of systemd-networkd’s features without Netplan’s limitations

- Integration: Better alignment with other systemd components

- Learning: Understanding the underlying network configuration system

- Performance: Potentially faster setup without the translation layer

Ubuntu Server uses a layered approach to network configuration:

- User configuration layer: YAML files in /etc/netplan/

- Translation layer: Netplan reads YAML files and generates configurations for a backend

- Backend layer: Either systemd-networkd or NetworkManager applies the actual configuration

By removing the middle layer (Netplan), we’re configuring the backend directly. As you can see from the previous parts of this Networking section in the guide, I like the learning value and long-term potential of systemd, which is why I went with it over Netplan.

Step-by-step Migration

- Begin by creating backups of your current network configuration

# Create a backup directory

sudo mkdir -p /etc/netplan/backups

# Copy all netplan config files

sudo cp /etc/netplan/*.yaml /etc/netplan/backups/

# Document the current network state

sudo ip -c addr | sudo tee /etc/netplan/backups/current-ip-addr.txt

sudo ip -c route | sudo tee /etc/netplan/backups/current-ip-route.txt- Review your existing Netplan so you know what to recreate

# View your current netplan configs

cat /etc/netplan/*.yaml- Now, create the corresponding systemd-networkd configuration files in

/etc/systemd/network/.- For each interface (wired, wireless, etc.) in your Netplan configuration, create a corresponding

.networkfile, with the appropriate configurations (i.e. static vs. DHCP). - Remember: For wireless connections, you need both a systemd and a wpasupplicant configuration.

- For each interface (wired, wireless, etc.) in your Netplan configuration, create a corresponding

# Create the directory if it doesn't exist

sudo mkdir -p /etc/systemd/network/

# For an Ethernet configuration

sudo nano /etc/systemd/network/20-wired.network

# For a Wireless configuration

sudo nano /etc/systemd/network/25-wireless.network

sudo nano /etc/wpa_supplicant/wpa_supplicant-wlan0.conf- Now that your networkd configuration is in place, disable Netplan.

# Ensure systemd-networkd is enabled

sudo systemctl enable systemd-networkd

sudo systemctl enable systemd-resolved

sudo systemctl start systemd-networkd

# Move the Netplan configurations to a disabled state

sudo mkdir -p /etc/netplan/disabled

sudo mv /etc/netplan/*.yaml /etc/netplan/disabled/

# Create a minimal netplan configuration that defers to systemd-networkd

sudo tee /etc/netplan/01-network-manager-all.yaml > /dev/null << EOF

network:

version: 2

renderer: networkd

EOFLet’s take a look at the systemctl enable and start commands, because without them we will lose connectivity when turning off netplan. Before we do that, however, what the minimal netplan configuration file does is essentially tell Netplan to just use networkd. We’ll remove it once we are sure everything is up and running.

- Enable Command:

sudo systemctl enable systemd-networkd: This configures systemd-networkd to start automatically when the system boots.- Behind the scenes:

- This command creates the necessary symbolic links in systemd’s unit directories so that the network daemon will be started by systemd during the boot process.

- It integrates the service into systemd’s dependency tree.

- Without this step, you would need to manually start networkd after each reboot, which is impractical for a server environment.

- Start Command:

sudo systemctl start systemd-networkd: This launches the systemd-networkd daemon immediately.- Behind the scenes:

- systemd spawns the networkd process, which then:

- Reads all .network, .netdev, and .link configuration files in

/etc/systemd/network/and/usr/lib/systemd/network/ - Applies the configurations to matching interfaces

- Sets up monitoring for network changes

- Reads all .network, .netdev, and .link configuration files in

- systemd spawns the networkd process, which then:

- Restart Command:

sudo systemctl restart systemd-networkd: This stops and then starts the daemon again, ensuring all configuration changes are applied.- Behind the scenes:

- systemd sends a termination signal to the running networkd process, waits for it to exit cleanly, and then starts a new instance.

- The new instance repeats the initialization process, reading all configuration files again.

- This is the command you’ll use most frequently when making changes to network configurations.

- Why Restart Is Necessary:

- While systemd-networkd does monitor for some changes, editing configuration files doesn’t automatically trigger a reconfiguration.

- The restart ensures that:

- All new or modified configuration files are re-read

- Any removed configurations are no longer applied

- All interface configurations are freshly evaluated against the current state

- Impact on Network Connectivity:

- A restart will temporarily disrupt network connectivity as interfaces are reconfigured

- For remote servers, use caution when restarting network services to avoid losing your connection

- For critical remote systems, consider using a command pipeline, like:

sudo systemctl restart systemd-networkd.service || (sleep 30 && sudo systemctl start systemd-networkd.service)- Which attempts to restart and then tries to start the service again after 30 seconds if connectivity is lost

- Apply the systemd-networkd network configuration

# Apply Netplan changes (this will do nothing as we now have a minimal config)

sudo netplan apply

# Restart systemd-networkd to apply our direct configuration

sudo systemctl restart systemd-networkd

# The next step is to enable and start the wpa_supplicant service.

sudo systemctl enable wpa_supplicant@wlan0.service

sudo systemctl start wpa_supplicant@wlan0.serviceThese commands are crucial for integrating wpa_supplicant with systemd, let’s break them down:

- Service Template:

- The

wpa_supplicant@wlan0.servicesyntax uses systemd’s template unit feature. - The @ symbol indicates a template service, and wlan0 is the instance name that gets passed to the template.

- This allows the same service definition to be used for different wireless interfaces.

- The

- Enable Command:

sudo systemctl enable wpa_supplicant@wlan0.service: This creates symbolic links from the system’s service definition directory to systemd’s active service directory, ensuring the service starts automatically at boot.- Behind the scenes:

- This modifies systemd’s startup configuration by adding the service to the correct target units. Typically multi-user.target.

- The symbolic links created point to the wpa_supplicant service template file.

- Start Command:

sudo systemctl start wpa_supplicant@wlan0.service: This immediately starts the service without waiting for a reboot.- Behind the scenes:

- systemd executes the wpa_supplicant binary with appropriate arguments

- Derived from the service template and the instance name (wlan0).

- The command effectively executed is similar to:

/usr/sbin/wpa_supplicant -c /etc/wpa_supplicant/wpa_supplicant-wlan0.conf -i wlan0

- Integration with systemd-networkd:

- When wpa_supplicant successfully connects to a wireless network, it brings the interface up

- systemd-networkd detects this state change through kernel events

- systemd-networkd then applies the matching network configuration (our earlier 25-wireless.network file)

- If DHCP is enabled, the DHCP client process begins

- Benefits of this systemd configuration:

- Dependency management (services can start in the correct order)

- Automatic restart if the service fails

- Standardized logging through journald

- Consistent management interface alongside other system services

- The template approach allows for modular configuration that can be easily expanded if you add more wireless interfaces to your Raspberry Pi.

- Verify the new configuration by checking the